The Cloud-Ready ADC

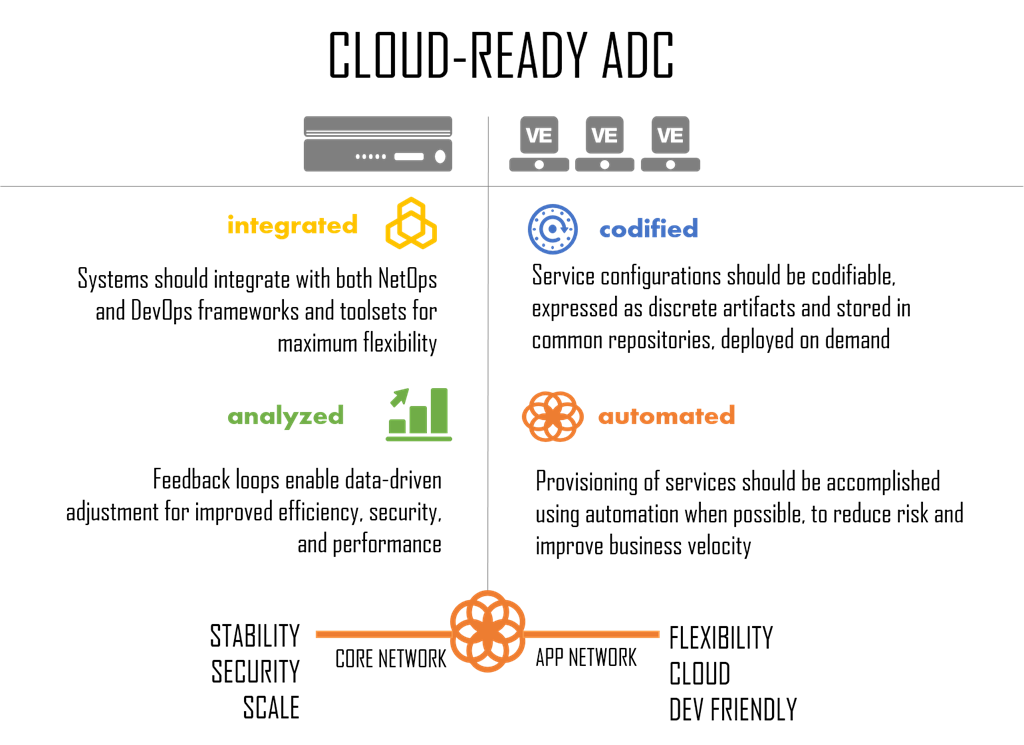

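A cloud-ready application delivery controller (ADC) is not your traditional ADC. It is a scalable software solution, deployable on custom or COTS hardware, that supports both needs: delivering applications quickly, securely, and with high availability, and deploying them. A cloud-ready ADC enables a modern, two-tier approach to data center architecture, combining traditional stability, security, and scale with modern flexibility, cloud readiness, and DevOps-friendly programmability.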

Great description, but what does it mean?

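I've written before about what it takes to be considered a modern application proxy, and that is certainly part of what a cloud-ready ADC needs. But today's demanding environments call for more than technical capabilities. They also require operational and ecosystem integration, plus the ability to support modern, emerging application architectures.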

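To be honest, plenty of ADCs make similar claims: cloud readiness, support for both traditional and emerging application architectures, and so on. So today I'd like to dig into what makes a cloud-ready ADC different from a traditional one, and then into why that matters so much in today's application world.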

Five Degrees of Differentiation

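There are basically five (six, if you want to be pedantic) ways in which a cloud-ready ADC differs from a traditional one. And since F5 pretty much defined what an ADC should be long before I joined the company, I think it's fair to say we understand ADCs better than anyone else: both what they need to do and how they need to evolve to keep delivering apps with the speed, security, and stability the business needs.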

Performance

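This one's a no-brainer, right? After all, if you're going to sit in front of apps and proxy for them, you need to be as fast as (or faster than) the apps you're delivering. Traditional ADCs come in appliance form factors: chassis, hardware, appliances. But a traditional ADC has always been constrained by the CPUs in the custom hardware it runs on. Because an ADC is a platform, it tends to focus on general speed, much as a general-purpose CPU focuses on general-purpose processing. Most do include a variety of hardware acceleration options, but those usually have to be chosen before deployment. Now, maybe we're crazy, but we think a cloud-ready ADC should be able to move past that limitation, recognizing that one app may need more of a particular service while another app needs more of a different one. Just like the on-demand re-purposing of compute that underpins the whole concept of cloud computing, organizations that invest in hardware of any kind should be able to re-purpose it.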

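This matters for services layered on the network because offloading common tasks to specialized hardware (FPGAs) frees up the CPU for other work, such as inspecting, modifying, and scrubbing requests and responses. That speeds up the whole "stop" at the proxy, which reduces latency and makes users happier.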

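So a cloud-ready ADC breaks through that barrier with software-defined performance, letting organizations programmatically boost the ADC's performance for specific kinds of processing, such as security. That means when the organization's needs change, the performance profile of its hardware can change on demand to match. That's hardware agility. I know, it sounds like an oxymoron, right? But it's part of what makes an ADC cloud-ready: the ability to re-purpose specialized hardware so that investments are protected and expensive forklift upgrades become unnecessary.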
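To make "changing the performance profile on demand" a little more concrete, here is a minimal Python sketch of how an operator-facing model of it might look. Everything in it is hypothetical: the `AccelerationProfile` class, the workload names, and the notion of dividing a fixed pool of "acceleration units" by percentage are assumptions made for illustration, not any vendor's API.

```python
from dataclasses import dataclass, field


@dataclass
class AccelerationProfile:
    """Hypothetical model of a fixed pool of hardware acceleration capacity."""

    total_units: int
    allocation: dict = field(default_factory=dict)

    def apply(self, percentages: dict) -> dict:
        """Re-divide the whole pool by integer percentage per workload."""
        if sum(percentages.values()) != 100:
            raise ValueError("percentages must sum to 100")
        # Integer arithmetic avoids floating-point rounding surprises.
        self.allocation = {
            name: self.total_units * pct // 100
            for name, pct in percentages.items()
        }
        return self.allocation


if __name__ == "__main__":
    profile = AccelerationProfile(total_units=40)
    # Initial deployment: capacity split between TLS and compression.
    print(profile.apply({"tls": 50, "compression": 50}))
    # Needs change: shift most of the same hardware toward security.
    print(profile.apply({"security": 75, "tls": 25}))
```

The point of the sketch is only that the second call reuses the same 40 units; nothing is bought or replaced when priorities shift.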

Programmability

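A long-standing complaint I've personally heard from customers many times is the mismatch between NetOps and DevOps when it comes to scripting. The problem was that traditional ADCs offered only the most basic scripting, and only in languages familiar to NetOps, not DevOps. DevOps is now more than willing to learn to take advantage of scripting in the data path, because it enables a wide range of more agile request/response routing, scaling strategies, and even security services. But with the traditional ADC sitting upstream in the core network, NetOps wasn't about to let DevOps deploy scripts that might disrupt the flow of traffic.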

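With the availability of virtual editions, DevOps gained access to its own personal, private ADC in the form of a virtual machine, but still had to learn a network scripting language. That's not a good thing. A cloud-ready ADC, like the cloud itself, needs to support both NetOps in the core network and DevOps in the app network. And DevOps and developers in particular prefer developer-friendly languages and runtimes like node.js. It's not just that they already know the language; there are also rich repositories of libraries (such as NPM) and services that enable faster integration with other developer-friendly infrastructure. So a cloud-ready ADC needs to support both.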
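For a sense of what a data path script does, here is a minimal sketch of a request routing rule. It is written in Python rather than node.js purely to keep the examples in this post in one language; the `Request` class, the pool names, and the `x-canary` header are all hypothetical and do not correspond to any particular ADC's scripting API.

```python
from dataclasses import dataclass, field


@dataclass
class Request:
    """Hypothetical, stripped-down view of an HTTP request."""

    path: str
    headers: dict = field(default_factory=dict)


# Hypothetical routing table: (path prefix, backend pool), most specific first.
ROUTES = [
    ("/api/v2/", "pool-api-v2"),
    ("/api/", "pool-api-v1"),
    ("/static/", "pool-static"),
]
DEFAULT_POOL = "pool-web"


def select_pool(request: Request) -> str:
    """Pick a backend pool for a request; the first matching rule wins."""
    # Let testers opt in to a canary build with a request header.
    if request.headers.get("x-canary", "").lower() == "true":
        return "pool-canary"
    for prefix, pool in ROUTES:
        if request.path.startswith(prefix):
            return pool
    return DEFAULT_POOL


if __name__ == "__main__":
    print(select_pool(Request("/api/v2/users")))
    print(select_pool(Request("/checkout", {"x-canary": "true"})))
```

A rule like this is exactly the kind of thing an app team wants to change frequently, which is why where it runs (core network or app network) matters so much to both NetOps and DevOps.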

App Security

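Traditional ADCs have offered basic application security for years. Sitting in front of apps, particularly web apps, meant (and still means) being the first thing in the data center able to recognize and deal with an attack. Naturally, much of that security revolved around protocol-level protection. SYN flood protection, DDoS detection, and other TCP and basic HTTP security options have long been an integral part of the traditional ADC.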

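But a cloud-ready ADC has to go further. Because it's likely to be one of the few "devices" built into the app architecture itself, there to provide the scale today's applications inevitably require, it makes sense for it to put a similar emphasis on full-stack security. At least we think so, especially because doing so improves performance. If you have to stop for one kind of processing, it makes sense to do as much processing as you can at the same stop. It's more efficient, and it eliminates the latency of moving from box to box. Anyone who's traveled long distances with kids knows what I'm talking about. The gas station has a bathroom. You don't want to stop again at a rest area five minutes later, do you? The same goes for security in the network. Do as much as you can in one stop, and cut the overhead that so often frustrates customers and businesses alike. Performance is critical, and a cloud-ready ADC should do everything it can to enable a faster app experience.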

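That can mean offering new models for managing the growing demand (and in some cases, requirement) to support secure communication between mobile devices and apps. It means supporting Forward Secrecy (FS) and the ciphers that make it possible. It also means enabling new models for offloading computationally expensive encryption and decryption to hardware designed for the job, without breaking the architectures needed to support microservices and emerging applications. A cloud-ready ADC should support both, enabling fast, secure communication while integrating seamlessly into today's architectures and cloud environments.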
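As a small illustration of what "supporting the ciphers that make Forward Secrecy possible" means in practice, the sketch below uses Python's standard `ssl` module to build a server-side TLS context that only offers forward-secret key exchange. It assumes a Python build linked against a reasonably recent OpenSSL; certificate loading is left out, and the function names are my own. It is not how any particular ADC is configured.

```python
import ssl


def forward_secret_server_context() -> ssl.SSLContext:
    """Build a server TLS context that only offers forward-secret suites."""
    ctx = ssl.SSLContext(ssl.PROTOCOL_TLS_SERVER)
    # Refuse anything older than TLS 1.2.
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    # For TLS 1.2, allow only ECDHE (ephemeral) key exchange with AES-GCM.
    # TLS 1.3 suites are configured separately by OpenSSL and already rely
    # on ephemeral key exchange for full handshakes.
    ctx.set_ciphers("ECDHE+AESGCM")
    return ctx


def cipher_names(ctx: ssl.SSLContext) -> list:
    """Return the names of the cipher suites the context will offer."""
    return [cipher["name"] for cipher in ctx.get_ciphers()]


if __name__ == "__main__":
    for name in cipher_names(forward_secret_server_context()):
        print(name)
```

The key exchange math behind these suites is the computationally expensive part, which is why the ability to offload it to purpose-built hardware comes up in the same breath.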

Partner Ecosystems and Automation

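The other API economy is critical to the success of private cloud and to the competitive advantage that increased automation and orchestration bring to the business. But as great as that is, the data center will always contain a variety of services from a variety of vendors, each with its own integration and object model. That often means laborious API-driven automation that requires expertise across every component involved. The reality is that two levels of integration are needed: one for orchestration and one for automation (they are not the same thing). There are platform-specific automation capabilities via APIs and templates, and then there is native integration into partner ecosystems, where orchestration takes precedence. A cloud-ready ADC needs both: the former to ensure automation through tools such as Puppet and Chef or through custom scripts and frameworks, and the latter to enable orchestration through increasingly important controllers such as OpenStack, VMware, and Cisco.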
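Template-driven automation is easier to picture with an example. The following Python sketch expands a handful of app-level inputs into a full declaration that a script or tool could then submit to a device API. The field names (`destination`, `pool`, `profiles`, and so on) and the function `render_virtual_service` are invented for illustration; they are not the schema of any real product.

```python
import json


def render_virtual_service(app: str, vip: str, members: list, port: int = 443) -> dict:
    """Expand a few app-level inputs into a full (hypothetical) declaration."""
    return {
        "name": f"{app}-vs",
        "destination": f"{vip}:{port}",
        "pool": {
            "name": f"{app}-pool",
            "loadBalancing": "round-robin",
            "members": [{"address": m, "port": port} for m in members],
        },
        # Defaults baked into the template so app teams need not know them.
        "profiles": ["tcp", "http", "client-tls"],
    }


if __name__ == "__main__":
    declaration = render_virtual_service(
        "shop", "203.0.113.10", ["10.0.0.11", "10.0.0.12"]
    )
    # A real workflow would send this JSON to the platform's API.
    print(json.dumps(declaration, indent=2))
```

The template is where the platform expertise lives; the caller only supplies what is specific to the app. That is the automation half. Orchestration is the layer above, deciding when and in what order such declarations get applied across systems.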

A cloud-ready ADC should integrate natively with, and offer orchestration through, the frameworks and systems being used to deliver comprehensive orchestration solutions.

And it should go without saying that a cloud-ready ADC needs to support cloud templates.

That's all I'm going to say about that.

App Models

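Traditional ADCs were born of the need to provide a robust set of services for traditional applications. Monoliths, if you will. Today's applications take advantage of a variety of architectures, platforms, and deployment models, and are more distributed, more diverse, and simply different from traditional applications. Whether they run in containers or virtual machines, in the cloud or in cloud-like environments, applications still need some of those services. And when you need more than one of those services at a time (say, load balancing and app security), you need an ADC. A traditional ADC may not be a good fit for microservices, but a cloud-ready ADC is. That includes a broad set of services that can benefit from load balancing and security, services that, like memcached, fall squarely in the domain of DevOps rather than NetOps.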
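To show why a cache tier like memcached benefits from smarter load balancing than plain round robin, here is a short Python sketch of consistent hashing. This is one common technique for spreading keys across cache nodes, chosen here as an example; it is not something this post prescribes. The `HashRing` class and the node names are hypothetical.

```python
import bisect
import hashlib


class HashRing:
    """Minimal consistent-hash ring mapping cache keys to nodes."""

    def __init__(self, nodes, replicas=100):
        # Each node gets many points on the ring to even out the spread.
        self._ring = sorted(
            (self._hash(f"{node}#{i}"), node)
            for node in nodes
            for i in range(replicas)
        )
        self._hashes = [h for h, _ in self._ring]

    @staticmethod
    def _hash(value: str) -> int:
        return int(hashlib.sha256(value.encode()).hexdigest(), 16)

    def node_for(self, key: str) -> str:
        """Return the node owning the first ring point after the key's hash."""
        idx = bisect.bisect(self._hashes, self._hash(key)) % len(self._ring)
        return self._ring[idx][1]


if __name__ == "__main__":
    ring = HashRing(["cache-a", "cache-b", "cache-c"])
    print(ring.node_for("session:42"))
```

The useful property is that when a container running one cache node disappears, only the keys that lived on that node move; keys on the surviving nodes stay put, so the rest of the cache remains warm.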

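A cloud-ready ADC is still a platform, but one that supports the programmability, integration, and form factor requirements needed to deliver the services that both traditional and emerging apps need, in the environments where they need them.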

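Traditional and cloud-ready ADCs have a lot in common. Both are platforms that deliver multiple services under a common operational model. Both are programmable, integrate with other data center and cloud systems, and offer flexibility in their performance capabilities. But a cloud-ready ADC goes beyond the traditional ADC, addressing the future of business and applications as well as the increasingly diverse need to integrate and interoperate with a wide variety of infrastructure and environments.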