As artificial intelligence (AI) continues to advance, the demand for high-performance hardware is skyrocketing. Organizations are finding it increasingly difficult to keep pace with the computing power required to run complex AI models and workloads. This is where GPU as a Service (GPUaaS) comes in.

By offering on-demand access to powerful graphics processing units (GPUs) over the cloud, GPUaaS is transforming the way businesses approach AI infrastructure. It eliminates the need for costly hardware investments, allows seamless scaling, and integrates smoothly with existing cloud services—all while simplifying operations. But how exactly does GPUaaS work, and why is it becoming the go-to solution for AI-driven organizations?

Unlocking AI potential in ASEAN

In the Association of Southeast Asian Nations (ASEAN), the GPUaaS market is expanding as more players enter the space to address specific regional challenges. One key factor driving this growth is language. Open-source large language models (LLMs) are predominantly trained in English, and they often struggle with local languages that are rich in cultural nuances. As a result, organizations need to re-train or fine-tune these models with local data to ensure more accurate and relevant responses in native languages.

At the same time, the benefits of GPUaaS are helping fuel its adoption. Scalability lets users adjust GPU resources to match project needs, while elasticity, through a pay-per-use model, lets organizations reduce overall expenses by paying only for what they use. GPUaaS also grants immediate access to cutting-edge technology, facilitating rapid prototyping and deployment that increases flexibility and reduces time to market.

Another important consideration is data gravity, residency, and sovereignty. Data gravity refers to the tendency of data to attract applications and services to its location for better performance and efficiency. In many cases, data must reside in specific locations due to residency and sovereignty regulations, which means that GPUaaS providers need to be located near their user bases. Sovereign AI, which emphasizes a nation's ability to develop AI using its own infrastructure, data, and resources, also plays a significant role in shaping the demand for localized GPUaaS.

Lastly, the cost and limited supply of GPUs within cloud service providers (CSPs) in ASEAN are factors to consider when adopting GPUaaS. According to a recent Dell report, on-premises AI deployments can yield up to 75% savings compared to CSP-based solutions. GPUaaS offers a cost-efficient alternative, allowing organizations to access high-performance GPUs without a significant upfront investment in hardware, making it an attractive option for those seeking to scale their AI capabilities in the region.
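To make the pay-per-use trade-off concrete, here is a minimal sketch of a break-even calculation between renting GPU hours and buying hardware upfront. The function names and every figure in it are hypothetical placeholders, not numbers from the Dell report or from any provider's price list, and the model deliberately ignores factors such as depreciation, staffing, and discounts.

```python
def pay_per_use_cost(gpu_hours: float, hourly_rate: float) -> float:
    """Total cost of renting GPUs for a given number of GPU-hours."""
    return gpu_hours * hourly_rate


def owned_cost(gpu_hours: float, upfront_cost: float, hourly_opex: float) -> float:
    """Total cost of owning hardware: purchase price plus running costs."""
    return upfront_cost + gpu_hours * hourly_opex


def break_even_hours(upfront_cost: float, hourly_rate: float, hourly_opex: float) -> float:
    """GPU-hours at which owning and renting cost the same.

    Below this usage, pay-per-use is cheaper; above it, owning is cheaper.
    """
    if hourly_rate <= hourly_opex:
        # Renting never costs more per hour, so owning never pays off.
        raise ValueError("hourly_rate must exceed hourly_opex for a break-even point")
    return upfront_cost / (hourly_rate - hourly_opex)


# Hypothetical inputs, for illustration only.
UPFRONT = 30_000.0   # purchase price of one GPU server
RATE = 3.0           # rental price per GPU-hour
OPEX = 0.5           # power, cooling, and hosting per GPU-hour when owned

hours = break_even_hours(UPFRONT, RATE, OPEX)
```

With these placeholder inputs, a team that expects to use far fewer GPU-hours than `hours` would spend less under pay-per-use, which is the situation the elasticity argument above describes.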

Balancing the benefits and risks of GPUaaS

While the benefits of GPUaaS help drive its widespread adoption, the model also brings its own set of concerns. One key issue is data security, as data transmitted to and from GPUs can be vulnerable to interception or unauthorized access. Furthermore, processing data on remote GPUs may involve navigating varying data protection regulations and compliance requirements. Another concern is performance: reliance on Internet or private connectivity, together with fluctuating GPU performance, could affect application speed and responsiveness. GPUaaS depends on stable, high-speed connections, and private networks are often favored over the public Internet for optimal performance.

How does F5 help?

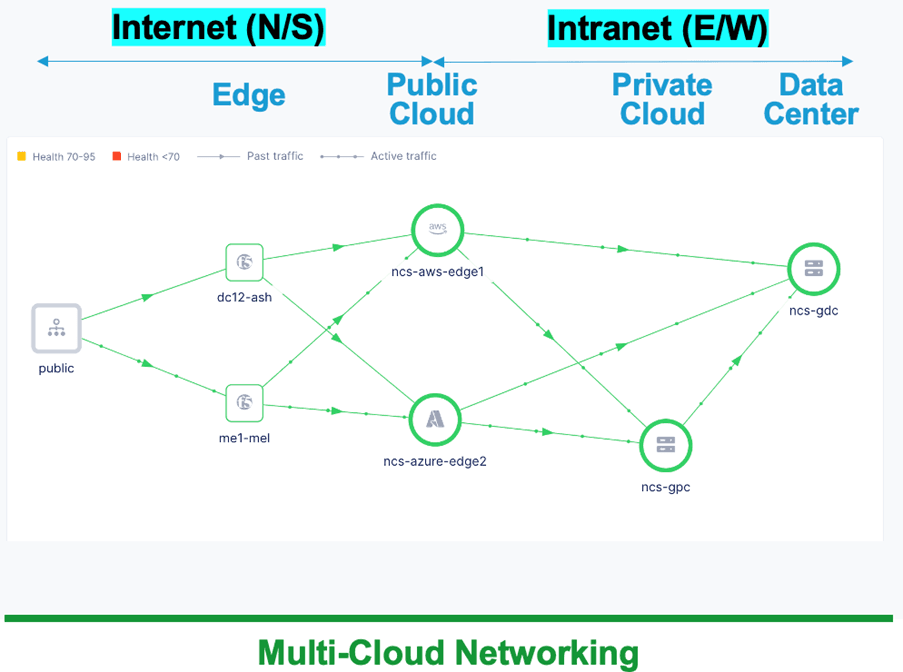

F5 offers innovative, multicloud SaaS-based networking, traffic optimization, and security services for public and private clouds, including GPUaaS providers, through a single console.

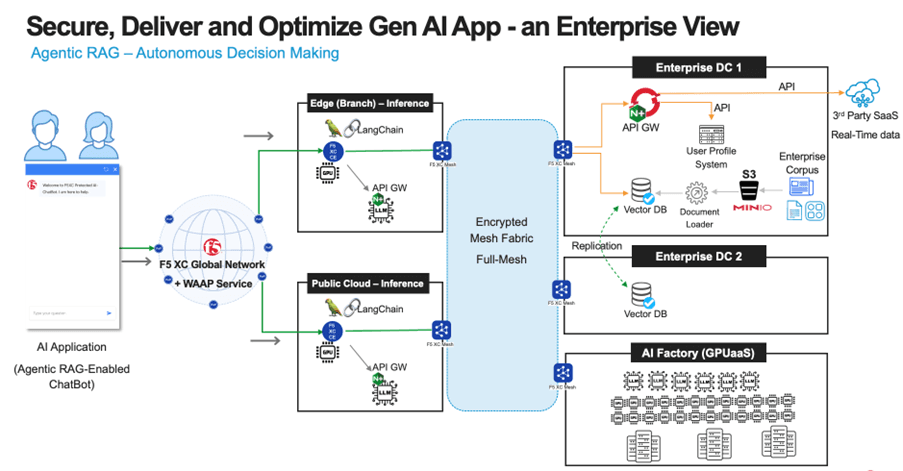

By forming an encrypted mesh fabric overlay on top of any network, organizations can connect to a GPUaaS provider (AI factory) for AI inference, embedding, or training. With full network and application segmentation, all overlay connectivity is private and secure, built on top of an existing network underlay. In addition, the F5 encrypted mesh fabric addresses digital resiliency challenges by dynamically monitoring, detecting, optimizing, and delivering traffic to healthy AI components, so that your AI applications stay up and available.
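The idea of delivering traffic only to healthy AI components can be pictured with a small sketch. This is not F5's implementation: the `Endpoint` type, the `pick_endpoint` function, and the endpoint names and latency figures are all hypothetical, and a real system would gather health and latency from live probes rather than fixed values.

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass(frozen=True)
class Endpoint:
    """One AI component reachable over the mesh (hypothetical model)."""
    name: str
    healthy: bool       # result of the most recent health check
    latency_ms: float   # most recent measured round-trip latency


def pick_endpoint(endpoints: Iterable[Endpoint]) -> Endpoint:
    """Return the healthy endpoint with the lowest latency.

    Unhealthy endpoints are never selected, however fast they look.
    """
    healthy = [e for e in endpoints if e.healthy]
    if not healthy:
        raise RuntimeError("no healthy endpoints available")
    return min(healthy, key=lambda e: e.latency_ms)


# Hypothetical inventory of inference endpoints.
inventory = [
    Endpoint("ai-factory-a", healthy=False, latency_ms=8.0),
    Endpoint("ai-factory-b", healthy=True, latency_ms=21.0),
    Endpoint("edge-site-c", healthy=True, latency_ms=14.0),
]

chosen = pick_endpoint(inventory)
```

Here `ai-factory-a` has the lowest latency but is skipped because it failed its health check, so the request goes to `edge-site-c` instead.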

Below is an example of an LLM retrieval-augmented generation (RAG) deployment that leverages an AI factory from a GPUaaS provider. Because data is encrypted as it crosses the secure mesh, data in transit is protected, and no data is stored at rest at the GPUaaS provider. An organization’s corpus data remains at rest in its original location, unchanged. This architecture also allows AI inference for latency-sensitive applications to take place at the edge (a public cloud or even branch offices).
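As a rough illustration of that data flow, the sketch below keeps the corpus local, retrieves the most relevant passages locally, and hands only the assembled prompt to a remote generation step. It is a toy under stated assumptions: the keyword-overlap retriever stands in for a real vector search, and `generate` is a hypothetical callable representing the call to the GPUaaS-hosted model, not an actual provider API.

```python
from typing import Callable, List


def retrieve(query: str, corpus: List[str], k: int = 2) -> List[str]:
    """Rank local documents by word overlap with the query (toy retriever)."""
    query_words = set(query.lower().split())
    ranked = sorted(
        corpus,
        key=lambda doc: len(query_words & set(doc.lower().split())),
        reverse=True,
    )
    return ranked[:k]


def build_prompt(query: str, passages: List[str]) -> str:
    """Combine the retrieved passages and the question into one prompt."""
    context = "\n".join(f"- {p}" for p in passages)
    return f"Context:\n{context}\n\nQuestion: {query}"


def answer(query: str, corpus: List[str], generate: Callable[[str], str], k: int = 2) -> str:
    """Retrieve locally, then send only the prompt to the remote model.

    `generate` is a placeholder for the remote inference call; the corpus
    itself is never passed to it.
    """
    prompt = build_prompt(query, retrieve(query, corpus, k))
    return generate(prompt)


# Hypothetical local corpus that stays at its original location.
local_corpus = [
    "GPU clusters are billed per hour",
    "Lunch menu for Friday",
    "Data residency rules apply to customer records",
]
```

In use, `answer("which data residency rules apply", local_corpus, generate, k=1)` would forward a prompt containing only the one matching passage, which mirrors the point that the full corpus does not need to leave its original location.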

If the AI application (such as an agentic RAG-enabled chatbot) is made accessible from the Internet, it is important to consider a cloud-network-based web app and API protection (WAAP) service to protect the AI application from cyberattacks.

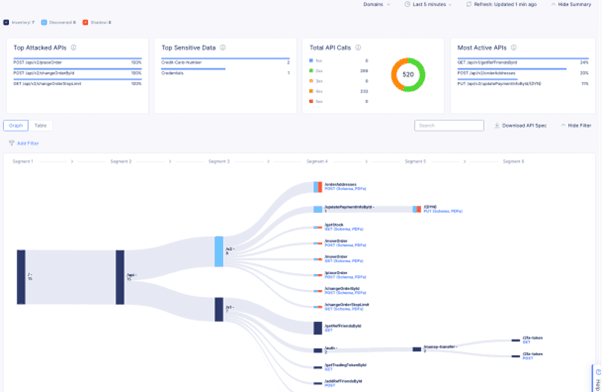

By leveraging a platform with a single management console, it is now possible to have compliance, observability, and control of all traffic, including APIs traversing North/South and East/West across the encrypted mesh fabric.

Moreover, traffic over the public Internet and the private encrypted mesh needs to be observable and controllable by NetOps and SecOps teams to manage what is essentially a complex and heterogeneous multicloud infrastructure.

Keen to learn more?

As AI adoption accelerates, building resiliency into AI systems is critical to ensure long-term success. GPUaaS offers a scalable and efficient solution, but organizations must navigate challenges such as data security, performance variability, and regulatory compliance. By addressing these concerns and leveraging the flexibility of GPUaaS, businesses can better position themselves to meet the growing demands of AI-driven workloads.

If you want to explore how AI resiliency can empower your organization, visit us at the upcoming GovWare conference at Booth P06, October 15 – 17, at Sands Expo and Convention Centre where we’ll be discussing these trends and solutions in more detail.
