Introduction

Albert Einstein’s quote seems more prescient than ever. Cybersecurity predictions tend to fall into one of two buckets: they are either utterly wild, deliberately courting media attention, or so safe that they are almost bound to come true.

For the fifth consecutive year, F5 Labs is stepping up to forecast some of the most concerning trends that could shape the cybersecurity landscape over the next twelve months. We do our best not to be deliberately outlandish with our predictions, but it sometimes feels like we’re stating the obvious, even with some of our bolder claims. While these articles are a bit of end-of-year fun, our real intent is to highlight the trends we think CISOs and business leaders should be aware of.

Looking Back at 2024: How Did We Do?

Before we kicked off our 2024 Cybersecurity Predictions, we self-graded our predictions from 2023 and were shocked and delighted to have scored a modest 100% (absolutely no shenanigans or wrongdoing at all)!

So how did we fare with last year’s predictions? Some of these prophecies are harder to prove than others, but let’s take a quick look regardless.

Nation-States Will Use Generative AI for Disinformation – TRUE

Disinformation is table stakes for many governments, and campaigns to divide and demoralize foreign nationals are nothing new. The widespread use of generative AI, however, has raised the quality of the fake content being created, as we saw during the recent US presidential election.

Attackers Will Improve Their Ability to Live Off the Land – TRUEish

Using tools built into an operating system, such as Windows, is much stealthier than installing malware on a victim’s device. APT28, however, showed the world what “living off the land” really looks like when it jumped from one organization’s network to the real victim’s network using Wi-Fi access points.1 The attack was found to have begun in 2022 but was only made public in November 2024, so I think we can claim victory for this prediction. Can’t we?

Advances in Generative AI Will Let Hacktivism Grow – TRUE

Granted, this was an easy win, but it was still a point worth making as loudly as possible. Because these tools are so easy to use, less skilled, but no less motivated, attackers can put them to work. Another recent example from the US election was the use of faked audio to persuade Democratic voters to stay home and not vote.

LLLMs (Leaky Large Language Models) – TRUE

The AI ‘gold rush’ is in full swing, with organizations tripping over themselves to embed LLMs into the very core of their business. As with every new protocol and technology, however, the fundamentals of information security are being ignored lest they slow down development. The result? LLMs are leaking personal and sensitive information all over the web.2

Web Attacks Will Use Real-Time Input from Generative AI – TRUEish

While there has been no evidence of this being used in the wild (and such evidence will likely be very hard to come by), there has been proof that genAI is capable of autonomously attacking websites. We build on this prediction this year; see Prediction 1: AI Powered Botnets for more information.

Generative Vulnerabilities – TRUE

Can genAI ever write secure code? Undoubtedly. Does it currently write secure code that can be completely trusted without review by a human? Perhaps not. According to multiple academic studies cited by SC Media, AI-generated code was 41% more likely to be vulnerable.3 Some of these studies are now a few years old, and we have to assume that current LLMs produce better code, but the point remains: AI should not be blindly trusted to create secure applications.

Organized Crime Will Use Generative AI with Fake Accounts – TRUE

Trend Micro’s research also found evidence of organized crime selling the creation of deepfakes. Threat actors sell fake profiles, charging $10 per image and up to $500 per minute of video. The incentive to use such profiles goes beyond disinformation, however. Many banks and cryptocurrency exchanges have Know-Your-Customer (KYC) verification systems, and stolen IDs and deepfake media are being used in attempts to circumvent these security measures.4

Generative AI Will Converse with Phishing Victims – TRUEish

Since so many of us use genAI to help polish our own writing, it was no surprise that threat actors would use LLMs to refine their malicious narratives when targeting victims who speak other languages. Trend Micro’s article also shows evidence of LLMs being bundled into phishing toolkits, such as GoMailPro, allowing attackers to perform rewrites and translations without even having to hop over to ChatGPT!5

I’m not going to say that we were necessarily wrong about the final two predictions, but they were certainly harder to prove. That said, we’ve had enough wins recently that we don’t mind owning up to a few fails.

- Attacks on the Edge

- Cybersecurity Poverty Line Will Become Poverty Matrix

Final score: 80%(ish)

The Artificial Internet

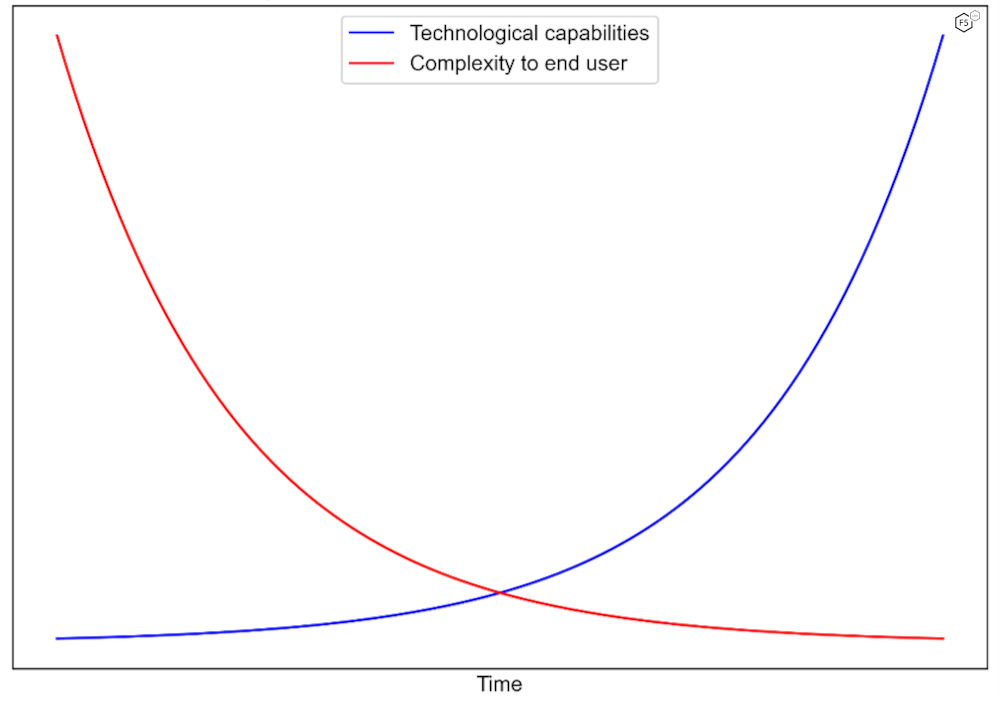

The paradox of an evolving cybersecurity landscape is that while technological capabilities advance at an exponential rate, the skill required to use them decreases at a similar pace. Advanced tools and complex attacks, once the domain of highly skilled academic researchers or well-funded nation-states, quickly find their way into the hands of anyone, requiring little more effort than the tap of a button.

Figure 1: Comparing technology with its ease of use.

Current AI systems, predominantly generative pretrained transformers (GPT), are a perfect example of this democratization of advanced technology. GPT systems are so complex that many data scientists are still not entirely sure how they work.6 Despite this overwhelming complexity, anyone with a computer or mobile phone can create artificial text, images, voice, and video on a whim. Every organization on the planet is racing to implement AI, lest their competitors get there first, so it should come as no surprise that threat actors are doing the same. From improving their phishing scams and simplifying their workflows to increasing profits and automating attacks, criminals are leveraging AI to enhance every aspect of their operations. This widespread adoption of AI by both legitimate businesses and threat actors creates a complex security landscape in which the technology serves as both a defensive tool and an attack vector.

Prediction 1: AI Powered Botnets

For years, F5 Labs has been tracking Thingbots: large-scale botnets composed of consumer-grade IoT devices. The situation hasn’t improved over the past eight years, and in early 2024 our sensor network picked up an alarming spike in scans attempting to exploit CVEs associated with home routers. So far, these botnets have primarily been used to launch DDoS attacks and build out residential proxy networks. We predict, however, that things are about to get far worse.

In February 2024, researchers at Columbia University published a paper showing that AI (LLM agents) could be taught to autonomously attack websites.7 Until recently, most people assumed that threat actors would use AI to help them write phishing lures or create malicious code for use in malware. Now it seems possible to ask an AI agent to find an unknown vulnerability in a web application or API and exploit it, entirely without human intervention.

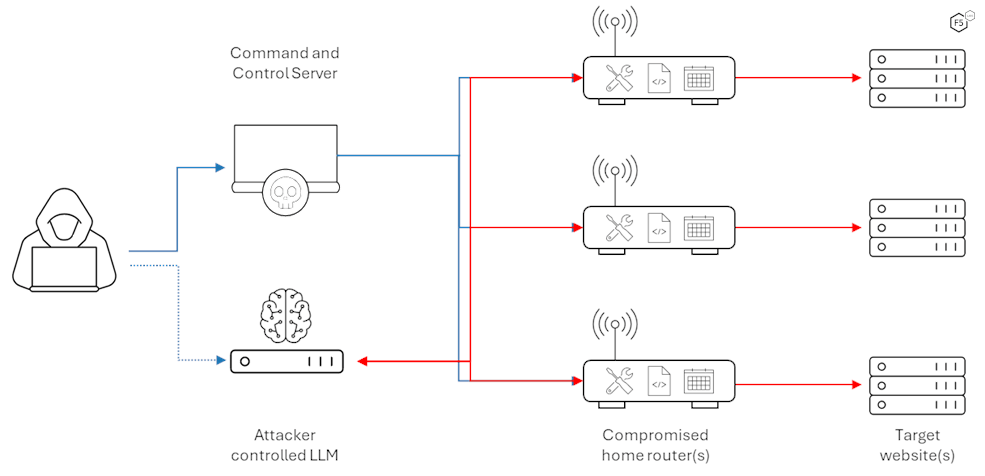

As we covered above, our 2024 Cybersecurity Predictions article correctly anticipated this worrying evolution in the malicious use of AI, if not in execution then at least in capability. Now we are predicting the large-scale weaponization of this technique. Threat actors could combine autonomous web-hacking LLMs with vast botnets of compromised routers, allowing them to launch active attacks from hundreds of thousands of devices at the same time. While each individual router might lack the computational power to run its own AI application, attackers could have the routers make API calls to an attacker-controlled LLM. This setup could enable a highly coordinated attack in which thousands of websites are targeted simultaneously by autonomous hacking efforts from a massive network of compromised devices.

Figure 2: Possible architecture of attackers turning botnets into a fleet of autonomous hacking devices.

With AI amplifying their capabilities, the speed and sophistication of these attacks would be unprecedented, making them extremely difficult to mitigate.

Prediction 2: Putting the AI Into API

We are currently in a global "AI race condition," where organizations—from startups to nation-states—are racing to adopt AI-driven technologies at unprecedented speeds, fearing that "if we don't, 'they' will." This frenzied adoption is creating a dangerous feedback loop: the more AI is used, the more complex the ecosystem becomes, requiring AI to manage that very complexity. Amid this rush, a critical vulnerability is emerging—the APIs that serve as the backbone of AI systems.

APIs are essential for AI operations, from model training to deployment and integration into applications. Yet the rapid adoption of AI is outpacing organizations’ ability to secure these APIs effectively. Many remain unaware of the full scope of their API ecosystems, with current estimates suggesting that nearly 50% of APIs are unmonitored and unmanaged. This exposes organizations to risks that are amplified by the sensitive data processed by large language models (LLMs).
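Part of the problem is simply knowing what exists. One common way to surface unmanaged (“shadow”) APIs is to compare the endpoints actually seen in traffic against the documented inventory. The sketch below is a minimal illustration of that idea, assuming a simplified “METHOD PATH STATUS” log format and a hand-written inventory; both, and every endpoint name in them, are hypothetical. In practice, the inventory would more likely come from an OpenAPI specification and the traffic from gateway or WAF logs.

```python
import re

# Hypothetical documented inventory, e.g. exported from an API specification.
DOCUMENTED = {
    ("GET", "/api/v1/patients/{id}"),
    ("POST", "/api/v1/appointments"),
}

# Hypothetical access-log lines in a simplified "METHOD PATH STATUS" format.
LOG_LINES = [
    "GET /api/v1/patients/1042 200",
    "POST /api/v1/appointments 201",
    "GET /api/v1/patients/1042/records 200",
    "GET /api/internal/llm/prompt-history 200",
]

# Matches a purely numeric path segment, e.g. "/1042" in "/patients/1042/records".
_NUMERIC_SEGMENT = re.compile(r"/\d+(?=/|$)")


def normalize(path: str) -> str:
    """Collapse numeric path segments into an {id} placeholder."""
    return _NUMERIC_SEGMENT.sub("/{id}", path)


def undocumented_endpoints(lines, documented):
    """Return sorted (method, path) pairs seen in traffic but missing from the inventory."""
    seen = set()
    for line in lines:
        method, path, _status = line.split()
        seen.add((method, normalize(path)))
    return sorted(seen - documented)


for method, path in undocumented_endpoints(LOG_LINES, DOCUMENTED):
    print(method, path)
# GET /api/internal/llm/prompt-history
# GET /api/v1/patients/{id}/records
```

Collapsing numeric IDs into a placeholder keeps a single endpoint from showing up as thousands of distinct paths, so what remains in the diff is genuinely undocumented surface area.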

We have already seen much evidence of LLMs leaking sensitive information via the front door – the AI application itself. However, hastily developed AI applications will also expose vast quantities of sensitive personal data via poorly secured APIs. As F5’s Chuck Herrin aptly notes, "a world of AI is a world of APIs." Organizations that fail to secure their API ecosystems will not only invite breaches but risk undermining the very trust they are trying to build with AI.

In the coming year, we fully expect to see huge amounts of personal and company data stolen via an API. Based on previous breaches and attacker trends, we expect the healthcare industry to be hit, with attackers exploiting a lack of authorization controls and rate limiting on the targeted API.
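Both of those weaknesses are simple to illustrate, which is partly why their absence is so damaging. The sketch below is a minimal, hypothetical handler for a records endpoint, assuming the caller has already been authenticated: it applies a per-user token-bucket rate limit, then checks that the caller actually owns the record requested (object-level authorization). The record store, user names, and limits are invented for illustration; in production these controls would typically live in an API gateway or framework middleware.

```python
from typing import Dict, Tuple

# Hypothetical in-memory store: record_id -> owning user.
RECORDS: Dict[int, str] = {101: "alice", 102: "bob"}


class TokenBucket:
    """Token-bucket rate limiter: bursts of up to `capacity` requests,
    refilled at `refill_per_sec` tokens per second."""

    def __init__(self, capacity: int, refill_per_sec: float, now: float) -> None:
        self.capacity = capacity
        self.refill_per_sec = refill_per_sec
        self.tokens = float(capacity)
        self.last = now

    def allow(self, now: float) -> bool:
        # Add tokens for the time elapsed since the last request, capped at capacity.
        elapsed = max(0.0, now - self.last)
        self.tokens = min(self.capacity, self.tokens + elapsed * self.refill_per_sec)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


BUCKETS: Dict[str, TokenBucket] = {}


def get_record(user: str, record_id: int, now: float) -> Tuple[int, str]:
    """Handle a request for one record on behalf of an authenticated user.

    `now` is passed in (e.g. time.monotonic()) so the example is deterministic.
    """
    if user not in BUCKETS:
        BUCKETS[user] = TokenBucket(capacity=5, refill_per_sec=1.0, now=now)
    # 1. Rate limiting: slows bulk enumeration of record IDs.
    if not BUCKETS[user].allow(now):
        return 429, "Too Many Requests"
    owner = RECORDS.get(record_id)
    if owner is None:
        return 404, "Not Found"
    # 2. Object-level authorization: being logged in is not enough;
    #    the caller must own the specific record requested.
    if owner != user:
        return 403, "Forbidden"
    return 200, f"record {record_id}"


print(get_record("alice", 101, now=0.0))  # (200, 'record 101')
print(get_record("alice", 102, now=0.1))  # (403, 'Forbidden')
# A client sweeping through IDs is cut off once its burst allowance is spent.
print([get_record("mallory", rid, now=1.0)[0] for rid in range(100, 110)])
# [404, 403, 403, 404, 404, 429, 429, 429, 429, 429]
```

Neither check alone is sufficient: authorization stops a user reading someone else’s record, while rate limiting limits how quickly an attacker can probe for records that happen to be exposed.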

Prediction 3: Attackers Use AI to Discover New Vulnerabilities

In November 2024, Google’s Project Zero announced that AI had, for the first time, discovered an unknown (0-day) vulnerability.8 The team of security researchers is responsible for discovering countless vulnerabilities in software products but, until recently, had done so with traditional security testing tools and techniques. Their announcement showed how their AI-powered framework had found a stack buffer underflow in SQLite, the widely used database engine. The vulnerability had gone undetected despite SQLite being rigorously tested with traditional techniques such as fuzzing.

This advancement is incredibly exciting for security researchers, as it promises to drastically improve the speed and efficacy of their work. However, while we expect to hear many more announcements of similar efforts by researchers over the coming year, it seems far less likely that threat actors will announce their own use of AI vulnerability discovery tools. It seems only too likely that well-funded nation-states have already begun similar efforts to discover vulnerabilities, which they will keep to themselves for use in espionage and active cyberattacks. As these AI-powered tools become commoditized, we can expect organized crime groups to follow suit.

As always, we find ourselves in an arms race. Can security researchers use AI to discover and patch vulnerabilities before threat actors are able to exploit them?

Prediction 4: AI Beats Quantum Cracking Crypto

Quantum computers remain a looming threat to traditional cryptographic systems like RSA, but their practical ability to break modern encryption standards is still in its infancy. A recent example comes from Chinese researchers who used quantum computing to factor 50-bit integers, a far cry from the 2048-bit RSA keys currently considered a minimum for use with TLS. Although their method demonstrates technical advancements in quantum algorithms, it is not yet scalable to break the exponentially larger keys used in practice. Experts suggest that it will likely require quantum computers with millions of qubits—far beyond current capabilities—to challenge RSA encryption directly.
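To put the 50-bit figure in perspective, numbers of that size are already trivial for classical computers. The sketch below illustrates that gap; it is not a reproduction of the researchers’ quantum method. It builds a hypothetical 50-bit semiprime from two primes near 2**24 and 2**25 and factors it with Pollard’s rho, a textbook classical algorithm whose expected running time grows roughly with the square root of the smallest prime factor. On a typical laptop this should finish in a fraction of a second, whereas the same approach is hopeless against a 2048-bit RSA modulus, whose two prime factors are each around 1024 bits long.

```python
import math
import random
import time


def is_prime(n: int) -> bool:
    """Miller-Rabin using the first 12 primes as bases (deterministic for n < 2**64)."""
    if n < 2:
        return False
    bases = (2, 3, 5, 7, 11, 13, 17, 19, 23, 29, 31, 37)
    for small in bases:
        if n % small == 0:
            return n == small
    d, s = n - 1, 0
    while d % 2 == 0:
        d //= 2
        s += 1
    for a in bases:
        x = pow(a, d, n)
        if x in (1, n - 1):
            continue
        for _ in range(s - 1):
            x = pow(x, 2, n)
            if x == n - 1:
                break
        else:
            return False  # a is a witness that n is composite
    return True


def next_prime(n: int) -> int:
    while not is_prime(n):
        n += 1
    return n


def pollard_rho(n: int, seed: int = 0) -> int:
    """Return a non-trivial factor of an odd composite n (Floyd cycle finding)."""
    rng = random.Random(seed)
    while True:
        c = rng.randrange(1, n)
        x = y = rng.randrange(2, n)
        d = 1
        while d == 1:
            x = (x * x + c) % n  # tortoise: one step of f(v) = v^2 + c mod n
            y = (y * y + c) % n  # hare: two steps
            y = (y * y + c) % n
            d = math.gcd(abs(x - y), n)
        if d != n:  # d == n means this attempt failed; retry with a new c
            return d


# Hypothetical 50-bit semiprime built from two primes near 2**24 and 2**25.
p = next_prime(2**24)
q = next_prime(2**25)
n = p * q

start = time.perf_counter()
f = pollard_rho(n)
elapsed = time.perf_counter() - start

print(n.bit_length(), sorted((f, n // f)) == [p, q], f"{elapsed:.3f}s")
```

The point is the scale rather than the algorithm: each extra bit in the smallest factor multiplies rho’s expected work by roughly the square root of two.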

Perhaps it is no surprise, then, that AI is emerging as the most significant threat to cryptographic security, not by directly breaking encryption algorithms, but by exploiting vulnerabilities in their implementation. Machine learning models have already been successfully applied to drastically reduce the time required to recover hashed passwords, and significantly improve the accuracy and speed of recovering AES keys from a computer’s RAM.9 These so-called “side-channel” attacks focus not on cryptographic primitives (such as algorithms like AES), but on the “noise” created by their use. A threat actor who is able to monitor and analyze physical signals, such as power consumption, electromagnetic emissions, or processing times, can infer information from that and use it to reconstruct the secret key.
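Processing time is the easiest of these side channels to demonstrate in a few lines. The sketch below is a classic textbook example, not an AI technique: it checks a guess against a hypothetical secret token using a naive byte-by-byte comparison that returns at the first mismatch. To keep the example deterministic, it counts comparison steps instead of measuring wall-clock time. The more leading bytes a guess gets right, the longer the check runs, and that is exactly the kind of faint, noisy signal an attacker would try to extract from real timing measurements (and that machine learning is well suited to analyze). Python’s standard-library `hmac.compare_digest` is designed to avoid this by not short-circuiting on the contents of its inputs.

```python
import hmac
from typing import Tuple


def naive_equals(secret: bytes, guess: bytes) -> Tuple[bool, int]:
    """Early-exit comparison that also reports how many bytes it examined.

    The step count stands in for running time: it grows with the number
    of correct leading bytes, which is what leaks information.
    """
    if len(secret) != len(guess):
        return False, 0
    steps = 0
    for a, b in zip(secret, guess):
        steps += 1
        if a != b:
            return False, steps
    return True, steps


def safe_equals(secret: bytes, guess: bytes) -> bool:
    """Comparison designed to resist timing analysis (standard library)."""
    return hmac.compare_digest(secret, guess)


SECRET = b"s3cr3t-token"  # hypothetical 12-byte secret for illustration

for guess in (b"x" * 12, b"s3" + b"x" * 10, b"s3cr3t" + b"x" * 6):
    ok, steps = naive_equals(SECRET, guess)
    print(guess, ok, steps, safe_equals(SECRET, guess))
# Steps rise 1, 3, 7 as more of the guess is correct.
```

An attacker who can observe that difference can recover the secret one byte at a time instead of guessing it all at once, which is why hardened implementations aim to make timing independent of secret data.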

The more cryptographers and manufacturers reduce side-channel emissions, the more side-channel analysis will depend on AI to make sense of the ever-decreasing amount of information these implementations leak. While quantum computing seems many decades away from directly cracking crypto, we predict that 2025 will see a number of advances in using AI to break traditional cryptography.

The Fragmented Internet

The open web, once heralded as a place for democracy and open information sharing, is rapidly changing. As global geopolitical tension increases, the web is at risk of becoming more fragmented than ever.

Prediction 5: Russia Disconnects

Russia has long been testing its ability to disconnect from the global internet through its "sovereign internet" initiative, which aims to create an independent network—Runet—that can operate without external connectivity. While the Kremlin cites national security and defense against cyberattacks as motivations, critics argue the move is a step toward greater censorship and information control. A full disconnection, though technically and economically challenging, could isolate Russian citizens and businesses from global services while strengthening the government’s grip on domestic narratives. However, it would also set a precedent for other nations to fragment the internet, threatening the open, interconnected nature of global communication.

Despite a potential disconnection, Russia-based threat actors are unlikely to abandon their use of phishing scams, web hacks, and disinformation campaigns against the West. Instead, they could adapt by using overseas agents, proxy networks, and third-party collaborators to carry out these operations. A disconnected Russia could function as a “black box,” where cyber activities originating from within the country become harder to trace. This would complicate attribution, making it more challenging to impose sanctions or hold Russian actors accountable. Without clear digital links to Russian infrastructure, the West might have to rely on circumstantial evidence or geopolitical analysis, giving Moscow plausible deniability.

While Russia has been attempting to disconnect from the global internet for many years, it now appears closer than ever, and 2025 could be the year it pulls the plug.

Prediction 6: State Sponsored Hacking Competitions Withhold Vulnerabilities

Events like China’s Matrix Cup and Iran’s Cyber Olympics showcase the growing role of state-sponsored hacking competitions in the threat landscape.10 While hackathons are traditionally seen as positive initiatives that help uncover and patch vulnerabilities, the concern with state-backed events lies in their potential to conceal discoveries. The most recent Matrix Cup concluded with no results displayed on its website and no claims of discovered vulnerabilities. This is consistent with Chinese law, which states that zero-day vulnerabilities must be disclosed only to the Chinese government.11

Rather than responsibly disclosing vulnerabilities, participants in these competitions are likely to see their findings weaponized for cyber espionage, intellectual property theft, or infrastructure sabotage. These events cultivate highly skilled hackers who may be recruited by state actors to carry out sophisticated campaigns against adversaries.

The potential fallout is significant. By leveraging the zero-day vulnerabilities uncovered at these competitions, state-sponsored groups can develop more innovative and destructive attack methods. These include highly targeted intrusions, large-scale data breaches, and critical infrastructure disruptions that could destabilize entire regions. As these vulnerabilities remain unpatched and exploited in secret, the rapid advancement of state cyber capabilities outpaces global defensive measures, deepening the asymmetry between attackers and defenders.

Prediction 7: APTs Will Make Attacks Look Like They Come From Hacktivists

APT28 (Fancy Bear), linked to Russia, has been known to leak stolen data through platforms designed to pose as independent whistleblowers or hacktivist entities, such as DCLeaks during the 2016 U.S. election. Iran’s Charming Kitten has also used social media personas and fake activist groups to distribute disinformation or mask espionage campaigns. These methods obscure state connections, making it harder for investigators to attribute attacks directly to nation-states.

Attributing cyberattacks to a specific APT or nation-state is often challenging, but when successful, a common response is to impose sanctions on the country linked to the attack. To mitigate the economic impact of such measures, more APTs are likely to adopt tactics designed to make their operations appear to originate from unskilled hacktivists rather than the highly trained operatives employed by intelligence agencies. By posing as grassroots actors, state-sponsored groups aim to confuse defenders, reduce the likelihood of decisive political responses, and maintain plausible deniability. This strategy further complicates the task of identifying state involvement, shifting blame toward independent or ideologically motivated individuals.

Prediction 8: Cloud Comes Home

By 2025, the rise of supply chain nationalism will likely prompt a fundamental rethinking of digital architectures, going beyond simple reshoring to encompass a broader shift in how businesses manage risk and resilience. As geopolitical tensions rise and new tariffs take effect, organizations will be forced to reconcile efficiency mandates with the growing need to localize their supply chains.12 This balance will create new classes of systemic risk, as companies attempt to "do more with less" and accelerate digital transformation while navigating stricter regulatory environments. A growing focus on reshoring will likely come with challenges, particularly in industries where key components cannot be quickly relocated or where strategic gaps in production may cause critical shortages.

At the same time, as governments push for efficiency and heightened self-reliance, the quality of supplier due diligence is likely to suffer. This may lead to increased third- and fourth-party risks, as businesses work with fewer vendors to reduce complexity and manage costs, often at the expense of thorough vetting. This is especially true in the context of sovereign cloud initiatives and geofencing efforts, which could create additional layers of complexity. With the acceleration of automation, AI adoption will also be a key tool for navigating these challenges, allowing organizations to consolidate platforms, reduce risk, and streamline processes. However, this push for efficiency and speed could further expose vulnerabilities in critical systems and complicate the governance of increasingly complex, localized supply chains.

Conclusion

AI is undeniably shaping our future, presenting both immense potential and significant risks. While it can serve as a tool for innovation and problem-solving, it also brings forth challenges that could lead to destruction or harm. Much like any tool, AI can be harnessed for both good and ill. Its increasing integration into complex systems adds layers of dependency and risk.

As organizations race to adopt their own AI solutions, a pressing question arises: how can AI-enhanced attacks be detected and blocked? The current reality is that such attacks, even when crafted by attacker-controlled AI, often resemble existing tactics, techniques, and procedures (TTPs). They typically rely on known techniques, use residential proxy networks to obscure their origins, and employ various methods to mimic human interaction with applications.

For now, these methods remain rooted in familiar tactics. However, as AI advances to uncover previously unknown vulnerabilities and develop novel exploits, attacks may become increasingly complex, potentially identifiable as AI-crafted due to their sophistication. Looking ahead, the majority of attacks may originate from attacker-controlled AI systems, evolving into a scenario where artificial general intelligence (AGI) could autonomously execute directives, carrying out attacks without human oversight. The implications of such a future demand proactive, forward-thinking defenses and robust AI governance.