We’ve all seen after-the-fact security camera footage of a wide variety of crimes splashed across social media and news sites. This visibility is a critical component of any judicial system, as it helps identify who did what and provides crucial, objective evidence of what actually happened.

Note that’s all past tense. Did. Happened. Past tense. The damage is already done. The visibility only provides an understanding of what occurred.

That’s a lot like logs. System logs are part of “big data”, and more specifically big “operational” data. This information is invaluable when tracking down a breach in security. Usually that breach has already happened, and we’re just frantically trying to figure out how, and from where, and why.

Even as we get better at “real-time” visibility, we’re still just observers, watching with horrified expressions when someone “gets inside” and malware spreads its Cthulhu-like tentacles across the network to infect every machine it touches.

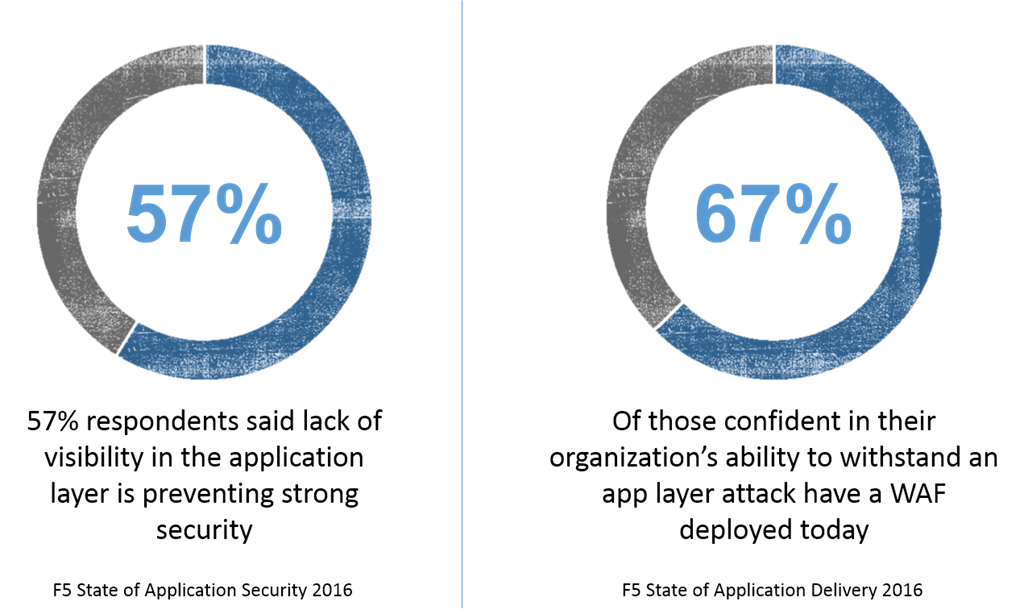

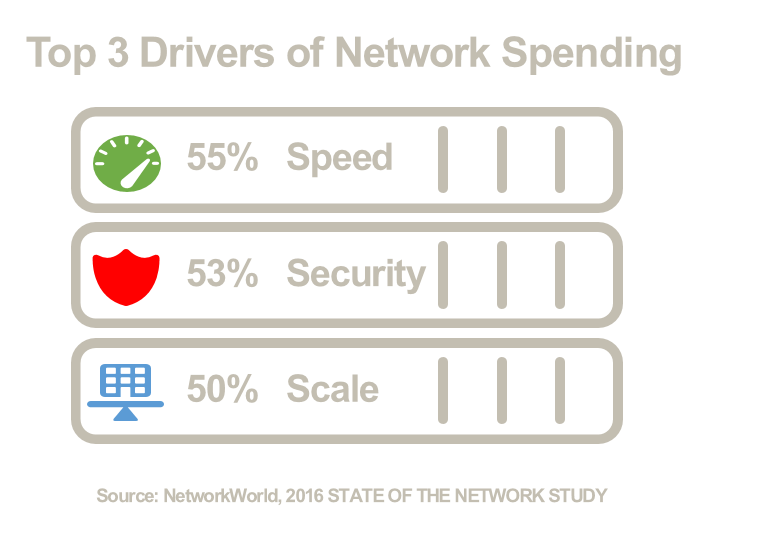

The problem is that real-time, actionable visibility – especially at the application layer, where it’s increasingly critical – is often elusive, even though we know it’s important to security efforts. To wit, in our State of Application Security 2016 report, the majority (57%) of respondents said a lack of visibility into the application layer is preventing strong security. That’s often because performance suffers when security has to climb all the way to the top of the OSI stack, and improving application performance remains a top driver of networking investments. Ahead of security. Those performance concerns are not unreasonable, either.

There are compelling reasons why security professionals lack visibility into the application layer:

Traditional network security devices are oblivious to traffic above layer 4 (the transport layer, where TCP operates). Parsing application layer (layer 7) traffic incurs latency and requires more horsepower (resources) than the standardized network traffic such devices typically inspect. Thus, most of these devices are not capable of inspecting application traffic and providing the necessary visibility. They are still important, but we should not rely on them for visibility they were never intended to provide.

I/O (input/output) is one of the most resource-intensive operations a system can execute. Writing to disk, whether locally or to a remote system across the network, incurs latency and consumes resources. Because of the nature of web applications, logging transactions with the depth and breadth of data needed to identify an attack takes time and resources that degrade both the application experience and capacity. Thus, visibility into the application layer is limited unless an active situation warrants more detail.

Security often gets in its own way when it comes to visibility. End-to-end encryption is great for privacy and security, and it enables organizations to check boxes on compliance audits, but the reality is that using SSL/TLS to secure HTTP-based application data hides that data from intermediate security solutions, preventing inspection and evaluation. Encrypting messages blinds the infrastructure to their content (malicious or not) and makes inspection, and therefore visibility, even more difficult to achieve. This is not to imply we should abandon encryption or security, but we do need to be conscious of its impact on visibility and architect solutions that provide the visibility we need without incurring heavy performance penalties or increasing administrative overhead.
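To make the first of these reasons concrete, here is a minimal Python sketch contrasting the two views of the same request. Everything in it is hypothetical and illustrative: the `Packet` type, the `layer4_allows` and `layer7_flags` functions, the allowed-port set, and the toy signature list. It assumes the payload is plaintext HTTP, which, given the encryption problem just described, is only true once TLS has been terminated somewhere. A layer 4 filter sees only addresses and ports, so it lets the request through; only parsing the request line and query string reveals the injection attempt, and that parsing is exactly the extra work that costs latency.

```python
from dataclasses import dataclass
from urllib.parse import parse_qs, urlsplit


@dataclass
class Packet:
    """Hypothetical, simplified view of traffic as a network device sees it."""
    src_ip: str
    dst_port: int
    payload: bytes  # application bytes carried above TCP (assumed plaintext HTTP)


ALLOWED_PORTS = {80, 443}

# Toy signatures for illustration only; real application-layer inspection is far richer.
SUSPICIOUS = ("' or '1'='1", "<script", "union select")


def layer4_allows(pkt: Packet) -> bool:
    # A layer 4 filter decides on addresses and ports; it never reads the payload.
    return pkt.dst_port in ALLOWED_PORTS


def layer7_flags(pkt: Packet) -> list[tuple[str, str]]:
    # Layer 7 inspection must parse the request: this is the extra work (and latency).
    request_line = pkt.payload.split(b"\r\n", 1)[0].decode("latin-1")
    _method, target, _version = request_line.split(" ", 2)
    # parse_qs percent-decodes values, so encoded attacks are compared in clear form.
    params = parse_qs(urlsplit(target).query)
    findings = []
    for name, values in params.items():
        for value in values:
            for sig in SUSPICIOUS:
                if sig in value.lower():
                    findings.append((name, sig))
    return findings


attack = Packet(
    "203.0.113.7",
    80,
    b"GET /search?q=%27+or+%271%27%3D%271 HTTP/1.1\r\nHost: shop.example\r\n\r\n",
)
print(layer4_allows(attack))  # True: port 80 is allowed, so layer 4 lets it through
print(layer7_flags(attack))   # one finding, for parameter "q"
```

Substring matching is only a stand-in here; the point is where the parsing has to happen, not how a production inspection engine decides what is malicious.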

It would be poorly done of me to point out problems without offering solutions, or at least ideas, regarding how to improve visibility without destroying performance or incurring tons of architectural debt.

First, if you’re relying only on traditional network devices, stop. You need to seriously examine whether your devices can inspect and act on traffic above layer 4, at the application layer (that’s layer 7). It is likely that at least one of the 11 services (on average) that our State of Application Delivery report says you have in place is capable of inspecting application layer traffic. Quite often, that capability has been overlooked, or another group (such as infrastructure) is responsible for the service in question. Get to know your network architecture end to end, and determine whether existing services can provide the visibility you need, so you can avoid the headache of adding more services to your architecture.

To address the issue of logging, consider taking advantage of the mirroring capabilities in load balancing services. If you’re a networky kind of person, this is like spanning a port, only at the application layer. Application delivery experts also call it “cloning” traffic. Basically, you use the capabilities of an application delivery platform to intelligently route copies of application requests to multiple back-end servers. There are a variety of reasons to implement such a pattern, including infrastructure patterns related to continuous deployment, like Blue-Green deployments and Dark Architecture migration patterns. Rather than impeding performance in the critical data path (between an active user and an application), the platform simultaneously directs a clone of the web request to another system, where it can be fully logged without incurring performance penalties. Real-time analysis of those logs can also occur without dragging down the system, because users do not depend on that system for responses. It’s just a clone, but it’s an accurate one. Programmability in the network further enables logging of responses in much the same way, giving security professionals the visibility into the application layer they need to be proactive rather than reactive.
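The cloning idea can be sketched in-process. The following is a minimal Python stand-in, assuming a bounded queue and a background thread can play the role of the mirrored path; an application delivery platform does this across the network, sending the copy to a separate server. `CloningProxy`, its parameters, and the drop-when-full policy are hypothetical choices for illustration. The user’s response depends only on the primary back end, and a slow analyzer never delays it: if the analysis side falls behind, clones are dropped and counted rather than blocking the request.

```python
import queue
import threading
from typing import Callable


class CloningProxy:
    """Hypothetical proxy: serve each request from the primary back end and
    hand an identical copy to an analyzer outside the critical data path."""

    def __init__(self, primary: Callable[[dict], str],
                 analyzer: Callable[[dict], None], max_pending: int = 1000):
        self._primary = primary
        self._analyzer = analyzer
        self._clones: "queue.Queue[dict]" = queue.Queue(maxsize=max_pending)
        self.dropped = 0          # clones discarded because the analyzer fell behind
        self.analyzer_errors = 0  # analysis failures never affect users
        threading.Thread(target=self._drain, daemon=True).start()

    def handle(self, request: dict) -> str:
        # Sketch assumes handle() is called from a single thread (counter is unlocked).
        try:
            # Copy now, so later mutation of the request cannot alter the clone.
            self._clones.put_nowait(dict(request))
        except queue.Full:
            self.dropped += 1  # never block the user just to preserve a clone
        # The response depends only on the primary back end.
        return self._primary(request)

    def _drain(self) -> None:
        # Background worker: full logging and analysis happen here, off the data path.
        while True:
            clone = self._clones.get()
            try:
                self._analyzer(clone)
            except Exception:
                self.analyzer_errors += 1  # keep draining even if analysis fails
            finally:
                self._clones.task_done()

    def wait_for_clones(self) -> None:
        # Block until every queued clone has been analyzed (handy in tests).
        self._clones.join()


logged = []
proxy = CloningProxy(primary=lambda req: f"200 OK for {req['path']}",
                     analyzer=logged.append)
print(proxy.handle({"method": "GET", "path": "/cart"}))  # 200 OK for /cart
proxy.wait_for_clones()
print(logged)  # [{'method': 'GET', 'path': '/cart'}]
```

Treating the clone as best-effort mirrors the trade-off described above: analysis capacity is never allowed to become the user’s latency.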

Finally, there are solutions to the problem of encryption/decryption. Generally speaking, your primary load balancing service is deployed on an application delivery platform that can also “offload” or “terminate” SSL/TLS. This capability is desirable in general for designing efficient delivery architectures, but it becomes even more important when you need to inspect encrypted inbound application traffic. By enabling it upstream of the back-end applications, at the point of distribution (the load balancing service), security professionals gain real-time visibility into requests (and responses, for that matter). That visibility enables proactive security policy enforcement that can stop an attack before it reaches deeper into the application infrastructure. The ability to decrypt and inspect is critical to the visibility security professionals need to confidently protect applications and their data from attacks. These platforms are often designed with both security and performance in mind, meaning they are optimized to decrypt and inspect traffic with minimal latency, mitigating the impact on the overall application experience.

Visibility doesn’t have to disrupt performance if it is baked into the data center architecture. Network architectures must adapt to the changing threat landscape by including services that provide the real-time visibility into the application layer that security and development professionals need to defend applications against exploitation and attack.