Organizations constantly struggle to balance embracing technological change, which opens up new business opportunities, with protecting the business from new challenges and risks. In this paper, we’ll examine containerization and how the adoption of this technology in F5 products affects IT professionals, architects, and business decision makers.

In the last 20 years, virtualization has transformed server computing, enabling multiple separate operating systems to run on one hardware platform. A more modern approach is containerization (or operating system virtualization), which enables multiple apps to run on a single instance of a host operating system. Each app instance shares the host operating system's kernel, while packaging the binaries and libraries it needs inside its own container. Figure 1 compares these approaches.

Unlike virtual machines (VMs), containers share the host operating system kernel, virtualizing at the OS level rather than at the hypervisor level.

Not surprisingly, developers like containers because they make developing and deploying applications, and spinning up microservices, quick and easy. Containerization also enables greater portability, as apps in containers are easier to deploy to different host environments, hardware platforms, and cloud services. Containers also use fewer resources than virtual machines, because they do not each need a full guest operating system.

Generally, most of the discussion (and documentation) about containers focuses on the programming or DevOps perspective. As an IT professional, system architect, or business decision maker, you may appreciate the flexibility that containerization brings, yet have genuine concerns about how this change will affect the administration, management, and monitoring of apps and services within your environment. You also want to ensure that containerization does not adversely affect network and app security.

In an increasingly competitive market, modern IT systems must deliver realizable business benefits. To meet this requirement, IT departments must be able to grow their application capital, designing architectures that feature not just more apps, but apps with greater functionality, enhanced usability, and easier integration.

The challenge for IT directors is that their departments must deliver greater functionality without enlarging headcount. Productivity improvements now come not from hiring more people, but from advances in technology and automation that let the same-sized team manage a growing number of apps available to customers.

Ease of container deployment presents a further challenge. Developers need education and guidance to ensure that they consider both scale and security when creating applications, and IT professionals can help them achieve those outcomes. Here, you need to think about the impact that container platforms such as Kubernetes and Docker have on production, and about how to ensure that the need for agility does not lead to poor security practices or to a failure to plan for production loads.

Kubernetes is an open-source platform for managing containerized services and workloads. Kubernetes originated at Google as a container scheduling and orchestration system, which the company open-sourced and then donated to the Cloud Native Computing Foundation (CNCF). Docker provides a related open source architecture and distribution, consisting of a suite of coupled Software as a Service (SaaS) and Platform as a Service (PaaS) products.

A key factor in delivering realizable business benefits is minimizing time to value. Increasingly, factors such as performance and latency take a back seat to ease of insertion. Consequently, given two components with nearly identical functionality, where one has higher performance and the other is easier to integrate, organizations tend to choose the one that is easier to integrate with their current environment. As environmental complexity rises, this bias is likely to continue.

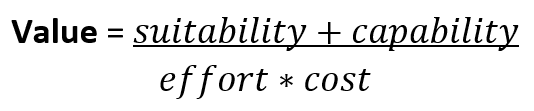

When looking to implement a technology such as containerization, you need some way of measuring the value that this new approach brings. The factors that affect value would typically include suitability, capability, effort, and cost. The following equation gives an example of how to assign relative value based on those factors.

The units that you use in the equation are irrelevant; what is important is that they are the same for each item that you evaluate.

This equation highlights that technology must be seamless to integrate and that familiarity (that is, ease of insertion) can matter more than best-in-class features or performance. Consequently, deployment effort has a balancing effect on overall value calculations, which can make less capable products more appealing to customers because they are easier to insert.

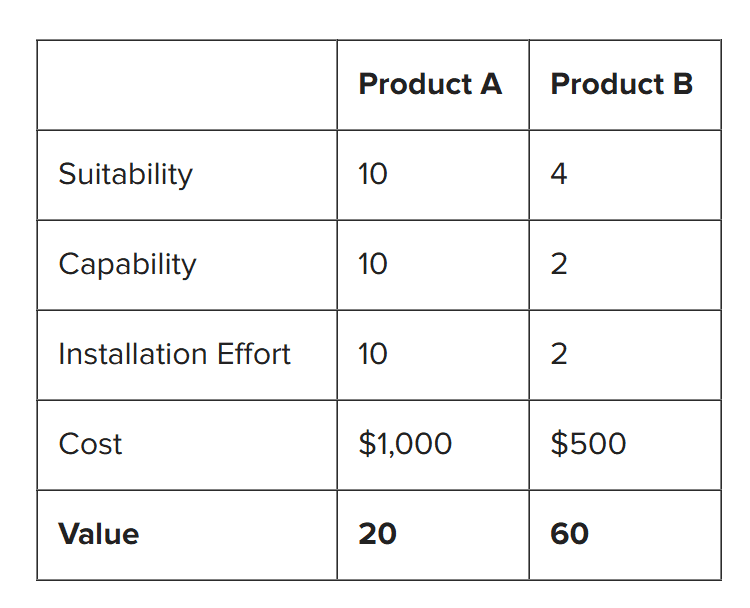

An example of this effect might be with two competing monitoring solutions. Product A has fantastic specifications, with best-in-class logging and reporting. It incorporates military-grade security and is highly manageable. Unfortunately, its proprietary command-line interface, poor support for open standards, and lack of integration features make it difficult to install. It is also more expensive than most other products on the market.

In comparison, Product B has nowhere near the capabilities of its rival, although it still provides acceptable levels of monitoring and security. However, it outscores the competing product on both cost and ease of installation. Tabulating the scores for each product produces the table below, with marks awarded from 1 to 10 for suitability, capability, and installation effort, where 1 is the lowest and 10 the highest.

Plugging these figures into the equation above produces the value score listed in the bottom row of the table.

The less suitable and less capable product, which is five times easier to install at half the price, delivers an estimated three times the value of its best-of-breed rival. Many organizations understand these considerations and increasingly treat ease of installation and integration as a key factor when adopting and deploying new technologies. Container-based approaches can significantly reduce deployment effort and control costs while maintaining both suitability and capability.
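The equation and score table referred to above are not reproduced in this text. To illustrate the arithmetic only, the sketch below assumes a simple multiplicative form, value = (suitability × capability) / (effort × cost), which may differ from the equation in the original figure. The scores are hypothetical, chosen so that Product B is five times easier to install and half the price of Product A.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Product:
    name: str
    suitability: float  # higher is better (1-10)
    capability: float   # higher is better (1-10)
    effort: float       # relative installation effort; lower means easier
    cost: float         # relative price; any unit, as long as it is consistent


def value(p: Product) -> float:
    """ASSUMED value model: benefits in the numerator, burdens in the denominator."""
    return (p.suitability * p.capability) / (p.effort * p.cost)


# Hypothetical scores, not the figures from the paper's table.
product_a = Product("Product A", suitability=10, capability=10, effort=5, cost=2)
product_b = Product("Product B", suitability=6, capability=5, effort=1, cost=1)

ratio = value(product_b) / value(product_a)
print(f"{product_a.name}: {value(product_a):.1f}")  # Product A: 10.0
print(f"{product_b.name}: {value(product_b):.1f}")  # Product B: 30.0
print(f"B delivers {ratio:.1f}x the value of A")     # B delivers 3.0x the value of A
```

Under this assumed form, cutting effort fivefold and cost in half shrinks the denominator tenfold, so Product B needs only 30% of Product A's suitability-times-capability score to deliver three times the value.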

With apps increasingly driving business value, containerization provides a logical solution to the challenges of DevOps-centric IT implementation practices. Containers provide isolation from the platform on which they run and from each other without the management overhead of running virtual machines. Developers can easily spin up app environments that contain all the binaries and libraries that they need, with the required networking and management facilities built in. Containerization also simplifies application testing and deployment.

Container-based environments provide greater resource utilization than virtual machines by massively scaling up the number of application instances that can run on a server. Because containers share the host operating system kernel rather than each running a guest operating system on top of the host, container environments make more efficient use of processor time and memory. Containerization also gives more architectural flexibility, as the underlying platform can be either a virtual machine or a physical server. Red Hat Product Strategy1 indicates that nearly half of Kubernetes customers deploying apps into containers in the future will run the container engine directly on bare metal.

Containerization is the next step on the road from hardware-based computing to virtualization to multi-cloud, enabling up to 100% automation, sub-second service creation times, and service lifetimes that can be measured in seconds. Container environments such as Docker, OpenShift, and Kubernetes enable this immediate provisioning and deprovisioning of services and microservices within a modern IT architecture.

Importantly, containerization delivers a key benefit that is vital in today’s multi-platform and multi-cloud world—the ability to port apps from on-premises to cloud, to another cloud vendor, and back to on-premises again, all without changes to the underlying code. With interoperability being a key factor underpinning architectural decisions, this portability enables IT departments to achieve key milestones for app services deployments and supports DevOps teams in meeting organizational goals. A recent IBM report2 highlights how the use of containers for app deployments also enables companies to improve app quality, reduce defects, and minimize application downtime and associated costs.

The same report also lists applications that are particularly well suited to container-based environments, including data analytics, web services, and databases. Here, the key factor is performance, but other relevant factors are whether the applications are likely to run across multiple environments and whether they use microservices to support multiple DevOps teams working in parallel. With containers, you can address performance issues by spinning up more instances of an app, provided that you implement a stateless architecture design and provide app services at scale.
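The stateless condition is what makes this kind of scale-out safe. As a minimal sketch, assuming a hypothetical external store (a plain dictionary here) in place of a real database or cache, the code below runs three identical replicas behind a round-robin balancer. Because no replica keeps session state in its own memory, a user's requests can land on any replica and still see consistent data.

```python
import itertools
from typing import Callable, Iterator

# Hypothetical external state store standing in for a database or cache.
# No replica keeps state in its own memory, so any replica can serve any request.
shared_store: dict[str, int] = {}

Handler = Callable[[str], tuple[int, int]]


def make_replica(replica_id: int) -> Handler:
    """Create a stateless request handler; all state lives in shared_store."""
    def handle(user: str) -> tuple[int, int]:
        shared_store[user] = shared_store.get(user, 0) + 1
        return replica_id, shared_store[user]
    return handle


def scale_out(count: int) -> Iterator[Handler]:
    """Spin up `count` identical replicas behind a round-robin balancer."""
    return itertools.cycle([make_replica(i) for i in range(count)])


balancer = scale_out(3)
results = [next(balancer)("alice") for _ in range(6)]
print(results)  # [(0, 1), (1, 2), (2, 3), (0, 4), (1, 5), (2, 6)]
```

If each replica instead kept its own counter, the user would see a different value depending on which replica served the request, and adding instances would no longer be a safe way to add capacity.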

Containers are not entirely without deployment challenges, and the still-young technology has not yet reached the maturity of virtualization. The following section highlights some of the challenges that containers bring.

A vital design criterion with containerization is balancing the three vectors of performance, density, and reliability, as shown in Figure 2.

The F5 approach is to focus on containerization at the component level rather than at the system level. This approach brings several advantages, which include:

Component-level containerization enables us to achieve that three-way balance through disaggregation, scheduling, and orchestration. F5 products that implement containers will then use these features to maximize the benefits of containerization.
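To make the three-way balance concrete, the toy scheduler below (a hypothetical illustration, not the F5 or Kubernetes scheduler) places replicas first-fit onto as few nodes as possible to maximize density, while refusing to put two replicas of the same app on one node, similar in spirit to Kubernetes pod anti-affinity, so that a single node failure cannot take an app offline.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    name: str
    capacity: int  # abstract resource units, e.g. CPU shares
    used: int = 0
    apps: list[str] = field(default_factory=list)


def schedule(replicas: list[tuple[str, int]], nodes: list[Node]) -> dict[str, list[str]]:
    """Toy first-fit placement (density) with one replica per app per node (reliability)."""
    for app, demand in replicas:
        for node in nodes:  # first fit: fill earlier nodes before touching later ones
            fits = node.used + demand <= node.capacity
            spread = app not in node.apps  # anti-affinity: no two replicas share a node
            if fits and spread:
                node.used += demand
                node.apps.append(app)
                break
        else:  # no node satisfied both constraints
            raise RuntimeError(f"no node can host another replica of {app}")
    return {node.name: node.apps for node in nodes}


nodes = [Node("node-1", 4), Node("node-2", 4), Node("node-3", 4)]
placement = schedule([("web", 2), ("web", 2), ("db", 2), ("db", 2)], nodes)
print(placement)  # {'node-1': ['web', 'db'], 'node-2': ['web', 'db'], 'node-3': []}
```

With these inputs, both apps survive the loss of either occupied node, and node-3 stays free. Tightening the spread rule would improve reliability at the cost of density, and loosening it would do the reverse.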

To support organizations as they adopt container-based technologies, F5 offers F5® Container Ingress Services, an open-source container integration. Container Ingress Services provides automation, orchestration, and networking services such as routing, SSL offload, HTTP routing, and robust security. Importantly, it integrates our range of BIG-IP services with native container environments, such as Kubernetes and Red Hat OpenShift.

Architecturally, Container Ingress Services occupies the following position in relation to your containerized apps, adding front-door Ingress controller services and enabling visibility and analytics through BIG-IP.

Container Ingress Services addresses two of the key issues identified earlier in this paper: scalability and security. You can scale apps to meet container workloads and protect container data by enabling security services. With the BIG-IP platform integration, you can implement self-service app performance within your orchestration environment. Container Ingress Services also uses Helm charts for template-based deployments and upgrades, which reduces the likelihood that developers will deploy Kubernetes containers with default settings.
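Template-based deployment helps because the template, rather than each developer, decides which settings are safe. As a minimal sketch of the kind of default-settings check this enables, the function below inspects a pod spec expressed as a Python dictionary. The field names (`securityContext.privileged`, `allowPrivilegeEscalation`, `runAsNonRoot`, `resources.limits`) are real Kubernetes pod-spec fields, but the check itself, the image names, and the rule set are hypothetical and are not part of Helm or Container Ingress Services.

```python
def find_risky_defaults(pod_spec: dict) -> list[str]:
    """Flag container settings that are commonly left at permissive defaults.

    Hypothetical lint check for illustration only.
    """
    findings = []
    for container in pod_spec.get("containers", []):
        name = container.get("name", "<unnamed>")
        sec = container.get("securityContext", {})
        if sec.get("privileged", False):
            findings.append(f"{name}: runs privileged")
        # Left unset, privilege escalation is generally allowed.
        if sec.get("allowPrivilegeEscalation", True):
            findings.append(f"{name}: privilege escalation not disabled")
        if not sec.get("runAsNonRoot", False):
            findings.append(f"{name}: runAsNonRoot not set")
        if "limits" not in container.get("resources", {}):
            findings.append(f"{name}: no resource limits")
        image = container.get("image", "")
        last = image.rsplit("/", 1)[-1]  # ignore a registry host:port prefix
        if (":" not in last and "@" not in last) or last.endswith(":latest"):
            findings.append(f"{name}: image tag not pinned")
    return findings


# A bare-bones spec, as a developer might write it without a template.
bare = {"containers": [{"name": "web", "image": "registry.example.com/web"}]}

# The same container with the values a hardened template might supply.
templated = {
    "containers": [{
        "name": "web",
        "image": "registry.example.com/web:1.4.2",
        "securityContext": {"runAsNonRoot": True, "allowPrivilegeEscalation": False},
        "resources": {"limits": {"cpu": "500m", "memory": "256Mi"}},
    }]
}

print(find_risky_defaults(bare))       # four findings
print(find_risky_defaults(templated))  # []
```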

In addition, Container Ingress Services provides security through advanced container application attack protection and access control services. For app developers and DevOps professionals who are not familiar with these configurations, F5 application services offer out-of-the-box policies and role-based access control (RBAC) with enterprise-grade support, in addition to the DevCentral customer and open source communities.
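Role-based access control grants permissions to roles rather than to individual users. As an illustration using the Kubernetes RBAC object shape (`rbac.authorization.k8s.io/v1` Role rules with `apiGroups`, `resources`, and `verbs`), rather than F5's own RBAC model, the sketch below checks whether a hypothetical read-only role permits a request. It deliberately ignores role bindings, `resourceNames`, subresources, and aggregation.

```python
# A Role in the shape of the Kubernetes RBAC API; names are hypothetical.
APP_VIEWER = {
    "apiVersion": "rbac.authorization.k8s.io/v1",
    "kind": "Role",
    "metadata": {"name": "app-viewer", "namespace": "shop"},
    "rules": [
        {"apiGroups": [""], "resources": ["pods", "services"], "verbs": ["get", "list", "watch"]},
        {"apiGroups": ["networking.k8s.io"], "resources": ["ingresses"], "verbs": ["get"]},
    ],
}


def _matches(value: str, allowed: list[str]) -> bool:
    """A rule field matches if it lists the value explicitly or uses the '*' wildcard."""
    return "*" in allowed or value in allowed


def allows(role: dict, api_group: str, resource: str, verb: str) -> bool:
    """Simplified check: permitted if any single rule matches group, resource, and verb."""
    return any(
        _matches(api_group, rule["apiGroups"])
        and _matches(resource, rule["resources"])
        and _matches(verb, rule["verbs"])
        for rule in role["rules"]
    )


print(allows(APP_VIEWER, "", "pods", "list"))                          # True
print(allows(APP_VIEWER, "", "pods", "delete"))                        # False
print(allows(APP_VIEWER, "networking.k8s.io", "ingresses", "update"))  # False
```

Kubernetes RBAC rules are purely additive: there is no deny rule, so anything not explicitly granted is refused.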

The challenges associated with native Kubernetes relate to developing and scaling modern container apps without increasing complexity. There may be constraints on performance and reliability, and services that provide a front door to Kubernetes containers may be inconsistently implemented, requiring greater integration effort to deploy and configure.

The solution to these challenges is NGINX Kubernetes Ingress Controller, which enhances the basic Kubernetes environment by providing delivery services for Kubernetes apps. By default, Kubernetes pods are reachable only from within the same cluster. Figure 4 shows the Kubernetes Ingress Controller providing access from the external network to Kubernetes pods, governed by Ingress resource rules that control factors such as the Uniform Resource Identifier (URI) path, the backing service name, and other configuration information. The available services depend on whether you use NGINX or NGINX Plus.
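As a sketch of what such Ingress resource rules contain, the code below builds a `networking.k8s.io/v1` Ingress object as a Python dictionary and models how request paths are matched to backing services. The resource name, host, service names, ports, and the `nginx` ingress class name are hypothetical. The matching function is a simplified model of `pathType: Prefix` (element-wise matching, longest prefix wins) that ignores host matching and other path types.

```python
import json
from typing import Optional


def build_ingress(name: str, host: str, routes: dict[str, tuple[str, int]]) -> dict:
    """Build a networking.k8s.io/v1 Ingress; routes maps a path prefix to (service, port)."""
    paths = [
        {
            "path": path,
            "pathType": "Prefix",
            "backend": {"service": {"name": service, "port": {"number": port}}},
        }
        for path, (service, port) in routes.items()
    ]
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "Ingress",
        "metadata": {"name": name},
        "spec": {
            "ingressClassName": "nginx",  # assumed; depends on how the controller is installed
            "rules": [{"host": host, "http": {"paths": paths}}],
        },
    }


def _segments(path: str) -> list[str]:
    """Split a URI path into its non-empty '/'-separated elements."""
    return [s for s in path.split("/") if s]


def route(ingress: dict, request_path: str) -> Optional[str]:
    """Simplified Prefix matching: element-wise, longest matching prefix wins (host ignored)."""
    best_len, best_service = -1, None
    for rule in ingress["spec"]["rules"]:
        for p in rule["http"]["paths"]:
            prefix = _segments(p["path"])
            if _segments(request_path)[: len(prefix)] == prefix and len(prefix) > best_len:
                best_len = len(prefix)
                best_service = p["backend"]["service"]["name"]
    return best_service


ingress = build_ingress(
    "shop", "shop.example.com", {"/": ("storefront", 80), "/api": ("orders-api", 8080)}
)
print(json.dumps(ingress, indent=2))
print(route(ingress, "/api/v1/orders"))  # orders-api
print(route(ingress, "/apix"))           # storefront ("/api" does not match "/apix")
```

Because `kubectl apply -f` accepts JSON as well as YAML, the printed object could be saved to a file and applied to a cluster that has an Ingress controller installed.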

Enterprises using NGINX gain features such as load balancing, SSL or TLS encryption termination, URI rewriting, and upstream SSL or TLS encryption. NGINX Plus brings additional capabilities, such as session persistence for stateful applications and JSON Web Token (JWT) API authentication.
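Session persistence matters when an app keeps state on a particular server. The sketch below is a conceptual model of cookie-based persistence, not NGINX Plus's implementation: new clients are assigned round-robin, and returning clients that present the affinity cookie go back to the same upstream. The upstream names and the cookie name `srv_id` are hypothetical.

```python
import itertools
from typing import Optional


class StickyBalancer:
    """Round-robin for new clients, cookie-based affinity for returning clients.

    A conceptual model of session persistence, not NGINX Plus's implementation.
    """

    COOKIE = "srv_id"  # hypothetical cookie name

    def __init__(self, upstreams: list[str]) -> None:
        self.upstreams = upstreams
        self._next = itertools.cycle(upstreams)

    def pick(self, cookies: dict[str, str]) -> tuple[str, dict[str, str]]:
        """Return (chosen upstream, cookies to set on the response)."""
        pinned: Optional[str] = cookies.get(self.COOKIE)
        if pinned is not None and pinned in self.upstreams:
            return pinned, {}  # returning client: keep it on the same server
        choice = next(self._next)  # new client, or its server is gone: round-robin
        return choice, {self.COOKIE: choice}


lb = StickyBalancer(["app-1", "app-2", "app-3"])
first, set_cookie = lb.pick({})  # new client is assigned app-1 and gets a cookie
again, _ = lb.pick(set_cookie)   # same client returns with the cookie: app-1 again
other, _ = lb.pick({})           # a different new client: app-2
print(first, again, other)       # app-1 app-1 app-2
```

A production implementation would typically store an opaque identifier in the cookie rather than the server name, so as not to reveal internal topology.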

As organizations adopt microservices to unlock developer agility and cloud-native scalability, they encounter a learning curve while organizational capabilities catch up to the technology. These organizations want to quickly unlock the benefits of microservices while preserving the stability that underpins their customer experience.

Aspen Mesh is a fully supported version of the open source Istio service mesh that helps companies adopt microservices and Kubernetes at scale and gain observability, control, and security over microservice architectures. Aspen Mesh adds key service mesh enterprise features, including a user interface that makes it easy to visualize and understand the status and health of your services, fine-grained RBAC, and policy and configuration capabilities that make it easier to drive a desired behavior in your containerized applications.

Aspen Mesh provides a Kubernetes-native implementation, which is deployed in your Kubernetes cluster and can run on your private or public cloud, or on-premises. Importantly, it can work cooperatively with an F5-based ingress mechanism, using Container Ingress Services to provision deeper Layer 7 capabilities.

Architecturally, Aspen Mesh leverages a sidecar proxy model to add levels of functionality and security to containerized applications, as seen in Figure 5.
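The essence of the sidecar model is that policy and telemetry live beside the app rather than inside it. The in-process sketch below illustrates only that idea: the application handler is unchanged, while a hypothetical `Sidecar` wrapper enforces an allow-list of calling services and records request counts and latency. In Istio-based meshes such as Aspen Mesh, the sidecar is an Envoy proxy running as a separate container in each pod and intercepting network traffic, not an in-process wrapper.

```python
import time
from collections import Counter
from typing import Callable

Handler = Callable[[str], str]


def orders_app(request: str) -> str:
    """Application code: it knows nothing about policy or telemetry."""
    return f"order list for {request}"


class Sidecar:
    """Conceptual sidecar: every call to the app passes through it first.

    In-process illustration only; real mesh sidecars are separate proxies.
    """

    def __init__(self, app: Handler, allowed_sources: set[str]) -> None:
        self.app = app
        self.allowed_sources = allowed_sources  # hypothetical policy: permitted callers
        self.metrics: Counter[str] = Counter()  # request counts per outcome
        self.latencies: list[float] = []        # seconds spent in the app per request

    def handle(self, source: str, request: str) -> str:
        if source not in self.allowed_sources:  # enforce policy before the app runs
            self.metrics["denied"] += 1
            return "403 Forbidden"
        start = time.perf_counter()
        response = self.app(request)
        self.latencies.append(time.perf_counter() - start)
        self.metrics["ok"] += 1
        return response


proxy = Sidecar(orders_app, allowed_sources={"frontend"})
print(proxy.handle("frontend", "alice"))   # order list for alice
print(proxy.handle("batch-job", "alice"))  # 403 Forbidden
print(dict(proxy.metrics))                 # {'ok': 1, 'denied': 1}
```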

F5 is at the forefront of container adoption, providing organizations with the ability to run reliable, secure, and high-performing containerized application environments that scale application services for workloads ranging from simple to massive and industry-specific. Container technology will be a key enabler in future application development and deployment environments, such as serverless implementations, service mesh architectures, and mobile edge security. As a company, F5 uses both its own development research and commercial implementations of containers to drive and develop apps and services that will make a difference to your business.

As with virtualizing existing products, we incorporate container technology in ways that may not be immediately obvious to the administrator, but will be entirely transparent to the end user. As a customer, you will see increased performance, flexibility, and security. As we seamlessly implement these new apps and services, you can concentrate on ensuring that you have the functionality that you need.

We are also working to adopt the best open source solutions into our products, extending the services that these open source platforms provide and enhancing the capabilities of our container environments. Our goal is always to create products that are easier to deploy, quicker to integrate into your existing networks, more reliable, and more secure.

To execute on our container adoption strategy, we are focusing on the following approaches:

F5 is committed to a future that sees containers as a foundational delivery mechanism for many future app services technologies. These technologies will include serverless, service mesh, and mobile edge, but may include other approaches currently under development or not yet envisioned. We are redesigning our product range to build containers into our solutions without affecting the important aspects of effective network management.

Just as with virtualization, containers themselves will become more aware of the underlying capabilities of the hardware on which the container engine runs. Multi-threading, memory speeds, storage access, and networking will be increasingly transparent to the apps running in each container, thereby further enhancing resource usage.

We do not see containers as a replacement for virtualization, but as complementary to it. Consequently, we expect containers to have a lifetime at least equal to that of virtualization, and the two to continue to co-exist. Finally, we expect further levels of abstraction to appear that will co-exist alongside both virtualization and containers.

We appreciate that a key concern for customers will always be security. Consequently, F5 continues to draw on its experience with hardware and software appliances, firewalls, and networking to provide the tightest possible security across all its products.

In summary, in addition to our already-existing container-related technology, F5 plans to continue incorporating containers into the F5 product range, providing you with more integration options, greater reliability, improved performance, and reduced deployment time with updated and future apps.

For more information about F5 and container support for apps, visit f5.com/solutions/bridge-f5-with-container-environments

1 The Rise of Bare-Metal Kubernetes Servers, Container Journal, Mike Vizard, 2019

2 The State of Container-based App Development, IBM Cloud Report, 2018

3 Misconfigured and Exposed: Container Services, Palo Alto Networks, Nathaniel Quist, 2019

PUBLISHED JUNE 23, 2022