In Part One of this series, we introduced fake account creation bots and why people create fake accounts, and in Part Two we covered why automation is used to create fake accounts and how fake accounts negatively impact businesses. In this article, we focus on how to identify fake bot accounts. We do not cover how to identify all kinds of fake accounts; we focus specifically on fake accounts created and controlled by bots. These accounts are made at scale and hence leave behind trails that make them easier to identify than a single fake account manually created by an individual.

How to Identify Fake Bot Accounts

Threat actors create different kinds of fake accounts with different levels of sophistication. As a result, there is no single way to identify fake accounts, and this article does not attempt to be a comprehensive guide to fake account identification. We will, however, share some common methods that can be used to identify them. It is important to note that these approaches may not be able to identify the most sophisticated fake accounts from advanced actors.

Username Pattern Commonalities

Fake accounts are typically created in an automated fashion and in large numbers, which creates patterns in the account names that allow them to be identified and linked together. The kinds of patterns in the fake account names depend on the level of sophistication of the attacker. Below are five different methods attackers use to generate fake account names. Understanding these approaches will help you identify the resulting fake accounts.

Numbered Accounts

The simplest pattern is a set of user accounts that share the same root username and domain name, with usernames that are numbered and incremented. For example, “fakeaccount1@gmail.com”, “fakeaccount2@gmail.com”, ..., “fakeaccount18765@gmail.com”. This is the simplest form of fake account name, used by the least sophisticated attackers. This approach has several shortcomings for the attacker: the accounts are easy to flag as potentially fake, and once one of them is identified, all associated accounts can easily be found and deleted. As a result, more sophisticated attackers will use more complex approaches.
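
As a rough illustration of how numbered accounts can be linked together, the sketch below groups addresses by root username and domain once a trailing number is removed. The function name `group_numbered_accounts` and the `min_group_size` threshold are assumptions made for this example, not part of any particular product. Real users also append numbers to their usernames, so only groups with several members are reported.

```python
import re
from collections import defaultdict

# Root must end in a non-digit; everything after it must be digits.
NUMBERED = re.compile(r"^(?P<root>.*\D)(?P<num>\d+)$")


def group_numbered_accounts(emails, min_group_size=3):
    """Group addresses that share a root username and domain and differ
    only in a trailing number. The threshold is illustrative."""
    groups = defaultdict(list)
    for email in emails:
        local, _, domain = email.strip().lower().rpartition("@")
        match = NUMBERED.match(local)
        if match:
            key = (match.group("root"), domain)
            groups[key].append(int(match.group("num")))
    # Keep only roots that occur often enough to look automated.
    return {
        key: sorted(numbers)
        for key, numbers in groups.items()
        if len(numbers) >= min_group_size
    }
```

A group such as `("fakeaccount", "gmail.com")` with many members is a candidate for review rather than proof of abuse.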

Random Username Generation

Another common approach used by attackers, slightly more sophisticated than the first, is to randomly generate alphanumeric usernames that are not meaningful to humans. This is done using a simple randomizer with a fixed or variable text length. Less sophisticated actors tend to use fixed-length usernames, while more sophisticated actors also randomize the length. Examples include addresses such as “PWBNhe7Ywu@qq.com”, “xYrjgJgWL2@qq.com”, and “4BC3idTCCc@qq.com” with fixed-length randomization, and “4BRh8aEHN8@qq.com”, “vGccVigQpHr9ZKc@qq.com”, and “FGyCqdmpLJtJWC64JQZv@qq.com” with variable-length randomization.

These kinds of usernames are hard to identify at scale because you cannot easily use regular expressions to match them, especially if they use a common domain name, which makes this approach superior to the simple numbered accounts described above. The chances of these accounts already existing in the email and other systems that attackers are targeting are also low, so there is little risk of username collisions. The accounts do, however, look suspicious on visual inspection, which is one of the shortcomings of this approach.
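
One way to approximate that visual inspection in code is a simple heuristic. The sketch below scores a username on two signals: how often adjacent characters switch between lowercase, uppercase, and digits, and how few vowels the letters contain. Both signals and both thresholds are assumptions chosen for illustration; they would need tuning against real data and will produce false positives and false negatives.

```python
VOWELS = set("aeiou")


def _char_class(ch):
    """Classify a character as digit, uppercase, or lowercase."""
    if ch.isdigit():
        return "d"
    return "u" if ch.isupper() else "l"


def randomness_signals(local_part):
    """Return (class_switch_ratio, vowel_ratio) for a username."""
    chars = [c for c in local_part if c.isascii() and c.isalnum()]
    if len(chars) < 2:
        return 0.0, 1.0
    classes = [_char_class(c) for c in chars]
    switches = sum(a != b for a, b in zip(classes, classes[1:]))
    letters = [c.lower() for c in chars if c.isalpha()]
    vowel_ratio = (
        sum(c in VOWELS for c in letters) / len(letters) if letters else 0.0
    )
    return switches / (len(chars) - 1), vowel_ratio


def looks_random(local_part, min_switch_ratio=0.3, max_vowel_ratio=0.3):
    """Heuristic only: many class switches combined with few vowels."""
    switch_ratio, vowel_ratio = randomness_signals(local_part)
    return switch_ratio >= min_switch_ratio and vowel_ratio <= max_vowel_ratio
```

With these illustrative thresholds, usernames like “PWBNhe7Ywu” are flagged while “john.doe.89” and “psullivan1976” are not. A score like this is best used to prioritize accounts for review alongside the other signals in this article.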

Use of the Same Format

A more sophisticated approach is to use a standard username format. This is not simply keeping the same base username and incrementing a number, as in the first approach described above. This approach also changes the root while maintaining the same format.

For example, an attacker might select a format of “firstname.lastname.2digityear@domain” and generate addresses such as “john.doe.89@yahoo.com”, “peter.sullivan.76@gmail.com”, or “mary.childs.03@gmail.com”.

Alternatively, the attacker might select a format of “initial+lastname+4digitDOB@domain” and generate accounts like “jdoe1986@yahoo.com”, “psullivan1976@gmail.com”, or “mchilds2003@gmail.com”.

To generate these accounts, attackers will typically acquire lists of PII or spilled credentials, or simply scrape social media for names and dates of birth, to build the seed list used to generate these email addresses. Attackers can also use dictionaries of names or words in combination with randomly generated dates of birth (DOBs) to generate similar kinds of accounts. This provides randomness in the account names, which makes them harder to spot and makes it difficult for security teams to find the rest of the accounts even when a subset has been identified. Trying to delete all accounts that share the same format, as in the two examples above, will result in an unacceptable number of false positives, as many real users follow the same username patterns.

Username Fuzzing

This is another common approach used by sophisticated attackers to create large numbers of fake accounts. It exploits the difference between how email providers and the other companies where fake accounts are being created interpret special characters in email addresses. Specifically, some email providers, most notably Gmail, do not treat periods in the username as part of the email address, while most other systems treat every variant as a distinct address. This leads to a case where a single Gmail account can be used to create hundreds and potentially thousands of fake accounts.

As an example, the Gmail address: “johndoesoap@gmail.com” can be used to create the following fake accounts on almost any system:

Fake accounts: “johndoesoap@gmail.com”, “john.doesoap@gmail.com”, “johndoe.soap@gmail.com”, “john.doe.soap@gmail.com”, “j.ohndoesoap@gmail.com”, “jo.hndoesoap@gmail.com”, “joh.ndoesoap@gmail.com”, “johnd.oesoap@gmail.com”, “j.o.h.n.d.o.e.s.o.a.p@gmail.com”, etc.

While there are a large number of valid combinations, the verification and notification emails for all of these fake accounts are sent to the same Gmail address, “johndoesoap@gmail.com”. This spares attackers the difficulty of creating thousands of Gmail accounts and circumventing the controls that Google has in place to prevent this. They can simply create a single account manually, which is easy to do, then use automation to fuzz that one email address and create potentially thousands of fake accounts on other platforms.

It is important to note that periods are not the only special characters that can be used to achieve username fuzzing. The “+” character, among others, can also be used instead of, or in combination with, periods to achieve the same result. For example, Gmail delivers mail for “johndoesoap+anything@gmail.com” to “johndoesoap@gmail.com”.
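
A common countermeasure is to reduce each address to a canonical form before checking for duplicates. The following is a minimal sketch under stated assumptions: the `DOT_INSENSITIVE_DOMAINS` set contains only gmail.com, and both period removal and “+” suffix stripping are applied only to domains in that set. Other providers have their own rules, so the set and the rules would need to be extended deliberately.

```python
from collections import defaultdict

# Assumption for this sketch: only gmail.com is treated as ignoring
# periods and "+suffix" tags. Extend per provider as needed.
DOT_INSENSITIVE_DOMAINS = {"gmail.com"}


def canonical_email(email):
    """Return the mailbox that will actually receive the mail."""
    local, _, domain = email.strip().lower().rpartition("@")
    if domain in DOT_INSENSITIVE_DOMAINS:
        local = local.split("+", 1)[0]   # drop "+anything"
        local = local.replace(".", "")   # drop periods
    return f"{local}@{domain}"


def fuzzed_groups(emails):
    """Map each canonical mailbox to the distinct variants registered."""
    groups = defaultdict(set)
    for email in emails:
        groups[canonical_email(email)].add(email.strip().lower())
    return {box: sorted(v) for box, v in groups.items() if len(v) > 1}
```

Storing the canonical form alongside the address the user typed lets a signup flow reject or flag the second and later variants of the same mailbox.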

Stolen Email Addresses

A more sophisticated approach is to use the compromised email addresses of real users to create fake accounts. Email addresses of real users on reputable email domains are compromised through phishing, malware, credential stuffing, and brute force attacks. These accounts are then taken over and their passwords changed to lock out the original owners. The email accounts can then be used for nefarious purposes, including fake account creation. These accounts are much harder to identify and cannot be tied together through username pattern analysis. They also tend to span a wide range of email domains, which adds to the difficulty of identification. This approach is more expensive, as the attacker must either purchase the compromised email addresses or incur the cost of compromising and taking them over.

Some attackers will use a combination of the approaches above to have a wide range of different username formats which makes it much harder to identify all the accounts associated with the fake account bot.

Account Creation Timing

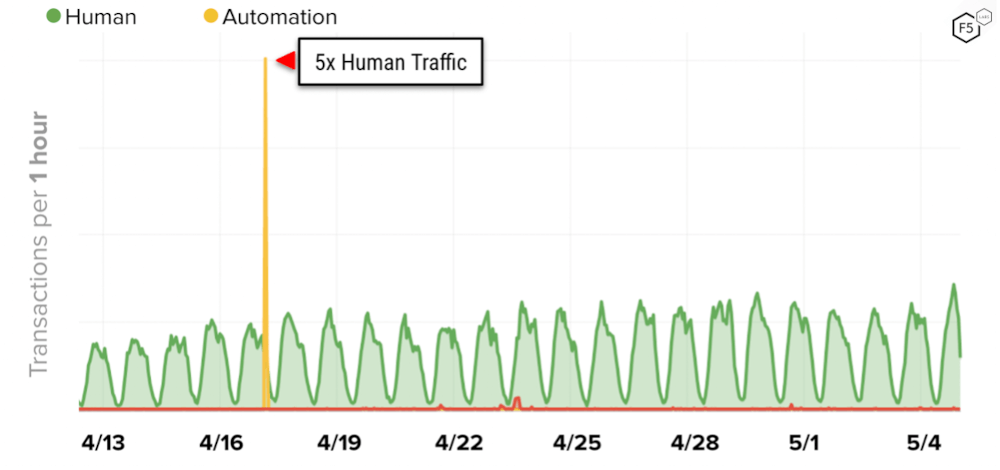

Many fake accounts are created by unsophisticated actors who will attempt to create a large number of accounts on a system in a very short period of time, typically hundreds of accounts per minute. This traffic is very easy to spot as even large websites do not have this number of new accounts being created every minute. Spikes in new account creation should therefore be looked at with suspicion unless tied to some marketing push or promotion for users to create new accounts. Below is an example of a spike in new account creation activity being done by a fake account creation bot.

Figure 1: Graph of account creation activity over time showing spike due to fake account creation

More sophisticated actors will spread the creation of fake accounts over a longer period of time. This makes this activity harder to detect and allows them to age accounts before they use the accounts for nefarious means. In many cases, the rate at which the accounts are created will be constant as it is controlled programmatically. Thus, activity where a new account is created every n minutes is just as suspicious as activity where lots of accounts are created in a single minute. The most sophisticated actors will fully randomize the timing of the new account creations, but most actors do not exhibit this level of sophistication.
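
Both timing patterns can be checked from a list of account creation timestamps. The sketch below is illustrative only: `creation_spikes` counts signups per fixed time bucket, and `interval_regularity` returns the coefficient of variation of the gaps between consecutive signups. The function names, the bucket size, and the threshold are assumptions. A coefficient near 0 means the gaps are almost constant, as with a programmatic schedule, while independent human signups typically produce much more irregular gaps.

```python
from collections import Counter
from statistics import mean, pstdev


def creation_spikes(timestamps, bucket_seconds=60, threshold=100):
    """Return the start times (in seconds) of buckets whose signup
    count reaches the threshold. Timestamps are epoch seconds."""
    buckets = Counter(int(ts // bucket_seconds) for ts in timestamps)
    return sorted(
        bucket * bucket_seconds
        for bucket, count in buckets.items()
        if count >= threshold
    )


def interval_regularity(timestamps):
    """Coefficient of variation of the gaps between signups.
    Returns None when there is too little data to judge."""
    ordered = sorted(timestamps)
    gaps = [b - a for a, b in zip(ordered, ordered[1:])]
    if len(gaps) < 2 or mean(gaps) == 0:
        return None
    return pstdev(gaps) / mean(gaps)
```

In practice these checks would be run per segment (for example per email domain or per source network), since a slow, regular drip of bot signups is easily hidden inside a site's overall traffic.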

Obscure and Low Reputation Email Domains

The limiting factor in the creation of fake accounts is having access to email addresses. Most systems require confirmation emails to be received to confirm email addresses before accounts can be created. This is not universal, and some systems allow accounts to be created without ever confirming email addresses.

More reputable email providers have security controls to prevent their email platforms from being used by bots for spamming, phishing, and other nefarious purposes. These controls make it harder to create large numbers of email addresses for use in fake account creation schemes. Other email providers do not have the same controls, and it is easy for bots to create large numbers of email addresses on these platforms. As a result, email addresses from these domains tend to be seen in a lot of fake account creation attacks.

Obscure email domains are also indicative of potential fake accounts. Most users will have email addresses from a handful of the top email providers like Gmail and Yahoo. The commonly expected email domains will vary by the regions of the typical legitimate users of the site, as there are email systems more common in some parts of the world than others. For example, qq.com is a popular email domain in China but less common in the Americas and Europe. Seeing a handful of accounts from obscure domains is not unusual. However, seeing large numbers of new accounts from these obscure domains is likely indicative of fake account creation.

There are also low reputation email providers including those offering throw away or disposable email addresses.

Low reputation email providers either do not have security guardrails to prevent abuse of their email service by spammers and criminals or they intentionally avoid implementing such controls to attract these kinds of users. As a result, those email domains end up being abused and added to deny lists by many internet and email security providers. Examples of low reputation email domains include mail[.]ru, 126[.]com, sohu[.]com and sina[.]com, among a very long and ever-growing list.

Disposable email providers offer temporary email addresses that can be used just once, or for a limited period of time. There are some legitimate use cases for these kinds of email addresses among privacy-focused users, but they also lend themselves perfectly to spamming and criminal use cases. As a result, such email domains should be looked upon with suspicion. Examples of disposable email providers include Mailinator, e4ward, Startmail, EmailOnDeck, Internxt, Temp Mail, Burner Mail, Guerrilla Mail and Fake mail. These providers have a large number of domains from which they can generate new email addresses.

More comprehensive lists of these email domains and providers can be obtained online from numerous free and paid sources.
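
Once such a list is available, new signups can be summarized by domain. The sketch below is a hedged example: `flag_domains`, the `deny_list` and `expected_domains` inputs, and the `min_count` threshold are all hypothetical names and values. It reports any deny-listed domain, and any domain outside the site's expected set that appears in unusually large numbers.

```python
from collections import Counter


def flag_domains(emails, deny_list, expected_domains, min_count=50):
    """Summarize signups by email domain.

    deny_list: domains from a low-reputation or disposable list.
    expected_domains: domains normal for this site's user base.
    min_count: illustrative volume threshold for obscure domains.
    """
    counts = Counter(e.strip().lower().rpartition("@")[2] for e in emails)
    report = {}
    for domain, count in counts.items():
        if domain in deny_list:
            report[domain] = ("deny-listed", count)
        elif domain not in expected_domains and count >= min_count:
            report[domain] = ("unusual volume", count)
    return report
```

The expected set should reflect the regions the site actually serves, since a domain that is obscure for one audience may be mainstream for another.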

Account Access Patterns

Fake accounts are typically accessed in a coordinated fashion. The bot will log into all the fake accounts at the same or similar times. By looking at account access logs, one can identify spikes in authentication activity where large numbers of accounts are accessed at the same time. This is usually effective against less sophisticated fake account bots, and on large websites where attackers assume their coordinated activity will be hidden in the mass of legitimate user traffic.

Behavioral Similarities

Fake accounts, depending on their purpose, are typically utilized in a coordinated fashion. For example, disinformation fake account bots will all simultaneously post, like, share and promote the same or similar pieces of content. This coordinated timing and activity can be used to identify related fake accounts that are part of the disinformation bot network. Fake accounts can also be seen retweeting the same content, following the same accounts, or reviewing the same products or products by the same seller. These similarities can bring attention to fake account bot activity.

Sharing of Passwords

Some fake account bot operators are lazy and do not want the overhead of managing a large list of credentials for their large number of fake accounts. Hence, they simply use the exact same password for all their fake accounts. Looking for identical passwords in your system (which should hold them in hashed form, not plaintext) will reveal potential fake accounts that are controlled by the same actor. It is possible for small numbers of unrelated users to have the same password. However, given stricter password requirements by websites, it is highly unlikely to see more than a handful of accidental password collisions. Any large-scale collisions of passwords are more likely to be accounts controlled by the same entity and are probably fake accounts.
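
How this comparison is done depends on how passwords are stored. With per-user salted hashes, identical passwords produce different stored values and cannot be matched directly. The sketch below assumes a different, hypothetical setup: at signup, the system also records a keyed fingerprint of the password (HMAC-SHA256 under a server-side secret), and accounts are later grouped by that fingerprint. The function names and the `min_accounts` threshold are illustrative, and whether keeping such a fingerprint is acceptable is a security trade-off each team must weigh.

```python
import hashlib
import hmac
from collections import defaultdict


def password_fingerprint(password, key):
    """Deterministic keyed fingerprint: equal passwords give equal
    values. `key` is a server-side secret (bytes). This is an
    assumption of the sketch, not a replacement for salted hashing."""
    return hmac.new(key, password.encode("utf-8"), hashlib.sha256).hexdigest()


def shared_password_groups(records, min_accounts=10):
    """records: iterable of (account_id, fingerprint) pairs.
    Returns fingerprints shared by at least `min_accounts` accounts."""
    groups = defaultdict(list)
    for account_id, fingerprint in records:
        groups[fingerprint].append(account_id)
    return {
        fingerprint: ids
        for fingerprint, ids in groups.items()
        if len(ids) >= min_accounts
    }
```

The threshold reflects the point made above: a handful of accidental collisions is normal, while a large group sharing one password deserves a closer look.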

Profile Analysis

Analyzing user account profiles is a great way to identify fake accounts. There are numerous ways this technique can be used. For starters, many fake accounts created by unsophisticated bots tend to have incomplete or inconsistent information. For example, accounts may not have a profile picture, or the profile picture may be of low quality or of a non-human subject. These accounts also tend to miss a lot of important profile information and only complete the bare minimum required fields needed to successfully create an account.

For accounts that require a lot of personal verifiable information like addresses, phone numbers and credit cards, similarities in the personal verifiable information are also indicative of fake accounts. The chances that a thousand users all live at the same address, have the same shipping address, phone number or credit card details are very low. Security teams and systems designers can validate this information upfront to make it harder for attackers to create fake accounts in their systems.

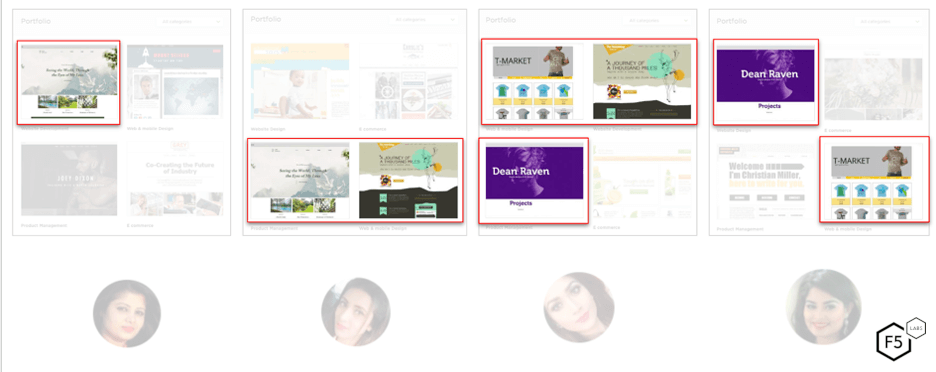

Even for data that is not personally verifiable, similarities in profiles can reveal a lot about potential fake accounts. Figure 2 below is an example of four freelance web designer profiles on a popular freelancing website.

Figure 2: Fake web designer accounts from a freelance website

The similarities in the profile pictures, as well as in the portfolios of websites they have designed in the past, hint that these are all fake accounts created and controlled by the same actors. The usernames and profile names of these four accounts (withheld) also show striking similarities. All the usernames follow the same patterns described under Username Pattern Commonalities above, and all the profile names consist of a first name and the initial “M”, a pattern repeated across hundreds of similar accounts. The skillsets and rates charged by each of these accounts were also identical, as was a large chunk of the profile bios. Also interesting were similarities in reviews. The accounts had reviews from the same list of users. Unsurprisingly, all posted stellar 5-star reviews that read almost identically with some slight variations. Profile analysis is therefore a powerful tool to identify fake accounts, though with the rise of generative AI, it is becoming easier for fake account bots to generate thousands of unique profiles, including profile pictures and portfolios of content.

Behavioral Analysis

Fake accounts tend to operate in a coordinated fashion and exhibit similar behaviors. The level of coordination will depend on the objective of the fake account bot creator. Not all fake account bots will need to act simultaneously, even if some level of coordination may still be involved. When conducting behavioral analysis, you are looking at behaviors at two distinct levels:

Individual Behavioral Analysis

This involves looking at the behavior of an individual account and identifying anomalous activity that is not consistent with real users. This includes things like superhuman speed, such as sending out 100 friend requests or posts a minute, which is not physically possible for a real person. It may also include the use of uncommon user agent strings like curl or Python, the use of headless browsers, and the use of automation tools like Postman and Selenium. This behavior is not common for real users and is also indicative of bots trying to create fake accounts.
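
Both signals are straightforward to check from request logs. The sketch below is a minimal example with assumed names: `AUTOMATION_UA_MARKERS` is an illustrative, incomplete list of substrings that commonly appear in the default user agent strings of such tools, and `max_actions_per_minute` finds the busiest 60-second window in one account's activity. User agent strings are trivially spoofed, so a clean user agent proves nothing.

```python
# Illustrative substrings only; attackers can change these at will.
AUTOMATION_UA_MARKERS = ("curl", "python", "postmanruntime", "headlesschrome")


def automation_marker(user_agent):
    """Return the first known automation marker found, or None."""
    ua = user_agent.lower()
    return next((m for m in AUTOMATION_UA_MARKERS if m in ua), None)


def max_actions_per_minute(timestamps):
    """Largest number of actions inside any 60-second sliding window.
    Timestamps are epoch seconds for a single account."""
    ordered = sorted(timestamps)
    best = start = 0
    for end, ts in enumerate(ordered):
        # Shrink the window until it spans less than 60 seconds.
        while ts - ordered[start] >= 60:
            start += 1
        best = max(best, end - start + 1)
    return best
```

An account whose busiest minute contains 100 posts or friend requests matches the superhuman speed described above.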

Cluster Behavioral Analysis

This involves looking for coordinated behavior across many accounts. It is not typical for large numbers of accounts to work in concert and exhibit the same behaviors at the same time. This includes behavior such as:

- Liking, following, and friending each other at the same time.

- Promoting and sharing the same content in a coordinated fashion.

- Posting of negative comments or disliking the same or similar content.

- Trying to purchase the same limited inventory products at the same time.

- Attempting to redeem or purchase large numbers of gift cards at the same time.

- Coordinated activity at unusual times of the day, which may be indicative of coordinated actors in foreign countries that operate in a different time zone than legitimate customers.
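
Several of the behaviors listed above reduce to the same question: which accounts performed the same action on the same target at nearly the same time? The sketch below groups events into fixed time windows to answer it. The event tuple layout, the function name `coordinated_groups`, and both thresholds are assumptions for illustration. Fixed windows can split a burst that straddles a boundary, so a production version might use overlapping windows instead.

```python
from collections import defaultdict


def coordinated_groups(events, window_seconds=60, min_accounts=5):
    """events: iterable of (account_id, action, target, timestamp).

    Returns {(action, target, window_index): set_of_account_ids} for
    every window in which at least `min_accounts` distinct accounts
    performed the same action on the same target."""
    buckets = defaultdict(set)
    for account_id, action, target, ts in events:
        window = int(ts // window_seconds)
        buckets[(action, target, window)].add(account_id)
    return {
        key: accounts
        for key, accounts in buckets.items()
        if len(accounts) >= min_accounts
    }
```

Accounts that appear together in many such groups over time are much stronger candidates than accounts that coincide once, since popular content naturally attracts simultaneous activity from real users.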

Network and Device Similarities

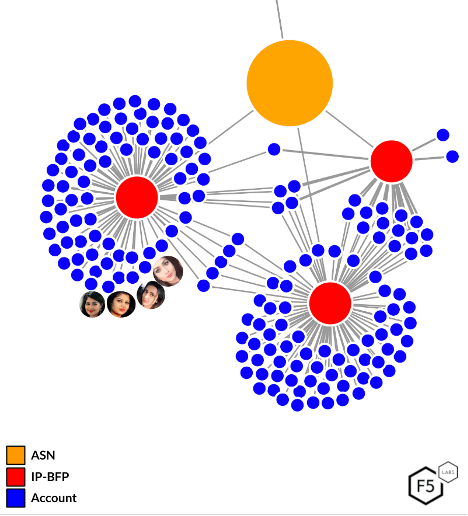

Fake accounts are typically created and accessed or controlled from the same distributed bot infrastructure. Similarities in the infrastructure that is accessing many accounts can also point to other potential fake accounts that are controlled by the same entity. Analyzing logs and device and browser fingerprinting data can bring attention to entities that are accessing a large number of accounts with exceptionally high login success rates (>90%). This is typically indicative of either aggregator or fake account bot behavior. The industry the activity is targeted toward can narrow down which of the two a given activity is likely to be. Figure 3 below is a network cluster diagram for the freelance website from Figure 2 above, showing the relationship between the infrastructure used to access a given set of accounts, including the four fake accounts from Figure 2.

Figure 3: Network cluster diagram showing devices and networks accessing fake accounts

The orange bubble represents the ASN or internet service provider of the IP addresses used to access these accounts. The red bubbles are combinations of browser/device fingerprints and IP addresses used to access these accounts. The blue bubbles are individual accounts on the freelancing website. This shows that tens of accounts are being controlled and accessed by the same device and network infrastructure. It also shows that the three unique devices are all connected, as they share access to sets of accounts. This allows us to isolate these fake accounts with a high level of confidence. The combination of browser/device fingerprint and IP address has fewer collisions than the IP address alone, since IPs can be shared among a large number of users in a way that browser/device fingerprints cannot.
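
The kind of clustering shown in the diagram can be approximated by treating accounts and (fingerprint, IP) pairs as nodes in a graph and finding connected components. The sketch below uses a small union-find structure for this. The login tuple layout, the function name `account_clusters`, and the `min_accounts` threshold are assumptions for illustration, not a description of how the figure was produced.

```python
from collections import defaultdict


def account_clusters(logins, min_accounts=3):
    """logins: iterable of (account_id, fingerprint, ip) tuples.

    Accounts are linked when they were accessed from the same
    (fingerprint, ip) pair, directly or through a chain of shared
    accounts. Returns a list of sets of account ids."""
    parent = {}

    def find(node):
        parent.setdefault(node, node)
        while parent[node] != node:
            parent[node] = parent[parent[node]]  # path halving
            node = parent[node]
        return node

    def union(a, b):
        root_a, root_b = find(a), find(b)
        if root_a != root_b:
            parent[root_a] = root_b

    for account_id, fingerprint, ip in logins:
        union(("acct", account_id), ("dev", fingerprint, ip))

    clusters = defaultdict(set)
    for node in list(parent):
        if node[0] == "acct":
            clusters[find(node)].add(node[1])
    return [accts for accts in clusters.values() if len(accts) >= min_accounts]
```

Because two devices become linked as soon as they share one account, a single cluster can span several devices, mirroring the three connected devices in Figure 3.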

Conclusion

Though there is no foolproof way to identify fake accounts, this article covered some of the main ways to do so. Because fake accounts must be created and controlled in large numbers via automation, they leave behind a trail of breadcrumbs that makes it possible to identify them. This can be done through a variety of methods, including simple username pattern and domain analysis, profile and behavioral analysis, and the analysis of network and device similarities. These are powerful methods whose efficacy depends on the level of sophistication, as well as the type and objective, of the fake account bots. Less sophisticated bots create accounts that are easier to identify, while more sophisticated bots create and operate accounts in a way that is harder to identify and requires more sophisticated analysis.

Recommendations

The identification of fake accounts, and of the infrastructure that controls them, is a complex topic. The first step is to develop the means of gathering relevant data for analysis, and then to apply the techniques above to that data.