While movies and media fuel the fear of AI superintelligence taking over the world, the real challenge enterprises face today is less cinematic but far more urgent: the tangled web of hybrid multicloud environments, APIs, and operational silos that will, over the next few years, have to support the deployment of society-changing AI advancements.

Superintelligent AI programs like Skynet from Terminator or HAL from 2001: A Space Odyssey make for compelling storytelling, but the actual nightmare enterprises are up against isn’t rogue machines—it’s figuring out how to deploy, secure, and manage the explosion of AI-powered apps and APIs that organizations require for innovation. Compounding this challenge is the need for enterprises to manage this new fleet of AI applications while continuing to operate their existing portfolio of distributed applications that keep the business running.

At F5, we call this expanding complexity the “Ball of Fire.” Managing a skyrocketing number of apps and APIs across increasingly distributed environments is already a huge operational challenge—but this Ball of Fire is about to burn a whole lot hotter with the explosion of AI.

AI’s exponential growth is fueling anxiety, but not how you might think

Over the past few years, investment in AI has reached unprecedented levels, with adoption curves climbing faster than anything we’ve seen in recent history. Governments are remaking entire power grids and modernizing regulations for industries such as nuclear energy to fuel the next wave of machine intelligence. It’s a race to harness AI’s transformative potential across enterprise ecosystems.

Enterprises rely on applications to deploy AI, which means a flood of additional workflows, APIs, and new dependencies within hybrid multicloud environments that are difficult to manage and secure in a uniform way. It’s here that the real threat lies. AI adoption doesn’t just add capabilities—it expands operational sprawl to levels many enterprises are ill-equipped to handle. Consider the challenges encountered by enterprises deploying a broad range of AI tools:

- Generative AI models connecting to APIs that interface with third-party datasets

- Agentic AI workflows managing entire SaaS-like ecosystems, such as marketing automation or customer personalization

- Real-time inference and model training pipelines operating across cloud, edge, and on-premises environments

Managing and securing this level of operational complexity amid the already significant challenges of fragmented environments creates a logistical nightmare. It’s not just about adding more applications; it’s about ensuring the entire patchwork ecosystem functions securely, consistently, and at scale. Enterprises are scrambling to tame this chaos even before they fully integrate AI at the levels they’re envisioning.

Enterprises must focus on solving the problem of AI deployment at scale. How do you harness intelligence in a system that’s already riddled with inefficiencies and technical debt? By consolidating app delivery and security services into a single, extensible platform.

Harnessing AI requires converged, unified platforms

Taming operational sprawl while scaling AI effectively requires more than tactical point solutions. Enterprises need a unified approach, which includes a platform capable of addressing the full spectrum of challenges—spanning deployment, management, security, and performance.

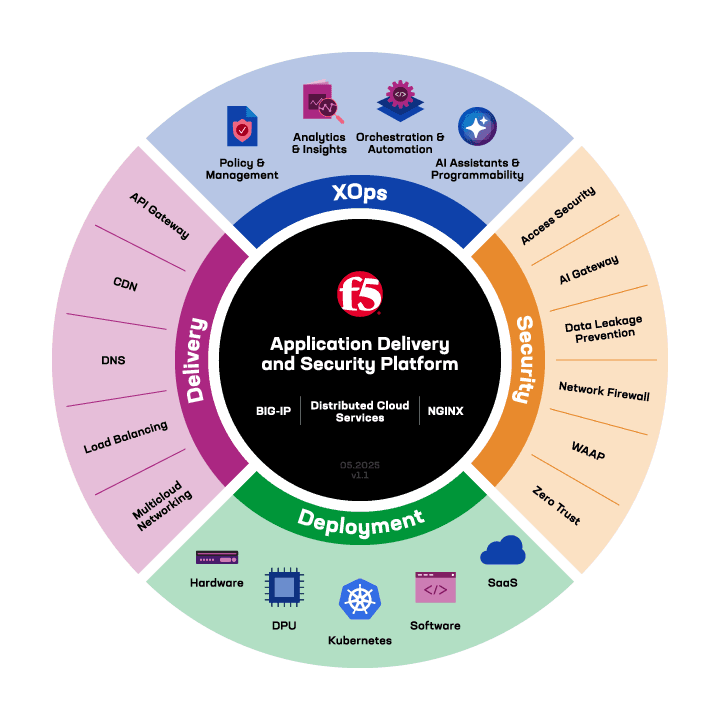

Successful platforms must drive value across four critical pillars to effectively manage and secure AI in enterprise environments:

Centralized management across every environment

Why it’s critical: Siloed environments create inefficiencies because teams often reinvent the wheel for every deployment, juggling redundant tools and inconsistent policies across cloud regions, on-premises installations, and edge deployments. These inefficiencies delay scaling efforts and leave enterprises exposed to risks like misaligned security configurations or compliance gaps. Agile centralized management lets development and security teams both serve the organization without getting in each other’s way. It also ensures enterprise-wide governance and compliance are consistently enforced. For instance, an organization bound by strict regulations like the General Data Protection Regulation (GDPR), the Health Insurance Portability and Accountability Act (HIPAA), or the California Consumer Privacy Act (CCPA) can implement automated security updates, such as encryption policies or audit trails, across all environments from a single control plane. This reduces manual errors, simplifies compliance reporting, and aligns security protocols with regulatory requirements.

Practical example: A financial institution managing generative AI applications across a hybrid multicloud environment uses centralized control to enforce consistent security policies and ensure compliance with data protection regulations like GDPR. This approach reduces manual configuration errors, eliminates redundancies, and safeguards sensitive customer account details while minimizing downtime.
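The idea of enforcing one policy from a single control plane can be sketched in a few lines of Python. This is an illustrative sketch only, not how any particular product works: the `SecurityPolicy` fields, the environment names, and the `find_drift` and `enforce` helpers are all hypothetical, chosen to show a baseline being compared against each environment and then applied uniformly.

```python
from dataclasses import dataclass, fields


@dataclass(frozen=True)
class SecurityPolicy:
    """Hypothetical baseline policy; the field names are illustrative only."""
    min_tls_version: str
    encrypt_at_rest: bool
    audit_logging: bool


def find_drift(baseline, environments):
    """Return, per environment, the settings that differ from the baseline."""
    drift = {}
    for name, actual in environments.items():
        diffs = [
            f.name
            for f in fields(SecurityPolicy)
            if getattr(actual, f.name) != getattr(baseline, f.name)
        ]
        if diffs:
            drift[name] = diffs
    return drift


def enforce(baseline, environments):
    """Apply the same baseline to every environment (the central step)."""
    return {name: baseline for name in environments}


baseline = SecurityPolicy(min_tls_version="1.3", encrypt_at_rest=True, audit_logging=True)

# Hypothetical environments, two of which have drifted from the baseline.
environments = {
    "cloud-eu": SecurityPolicy("1.3", True, True),
    "on-prem": SecurityPolicy("1.2", True, False),
    "edge": SecurityPolicy("1.3", False, True),
}

drift = find_drift(baseline, environments)
for env, settings in drift.items():
    print(f"{env}: out of policy on {', '.join(settings)}")

environments = enforce(baseline, environments)
```

Keeping the policy as one immutable object means a drift report and a fix both come from the same definition, which is the property the compliance reporting described above relies on.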

AI workload security at scale

Why it’s critical: AI interactions often involve sensitive and dynamic data pipelines that must be secured in real time without compromising performance. This is particularly important for AI inference endpoints and model training pipelines, which are vulnerable to attacks such as prompt injection, data poisoning, and model theft.

Practical example: A healthcare organization using AI-powered diagnostics relies on comprehensive API discovery and encryption to protect sensitive patient data. Automated threat detection not only identifies anomalies in real-time traffic but also mitigates attacks before they impact operational performance. This level of proactive security empowers the enterprise to deliver high-performing AI services while safeguarding sensitive data.
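As a rough illustration of what identifying anomalies in real-time traffic can mean, the sketch below flags a per-minute request count that sits far outside the range of the preceding few minutes. It is a deliberately simple rolling z-score, not a description of any vendor’s detection logic; the function name, window size, threshold, and sample traffic are all assumptions made for the example.

```python
from statistics import mean, stdev


def find_anomalies(requests_per_minute, window=5, threshold=3.0):
    """Return indices whose value deviates from the mean of the preceding
    `window` samples by more than `threshold` standard deviations."""
    anomalies = []
    for i in range(window, len(requests_per_minute)):
        history = requests_per_minute[i - window:i]
        mu = mean(history)
        sigma = stdev(history)
        value = requests_per_minute[i]
        if sigma == 0:
            # Perfectly flat history: treat any change at all as anomalous.
            if value != mu:
                anomalies.append(i)
            continue
        if abs(value - mu) / sigma > threshold:
            anomalies.append(i)
    return anomalies


# Hypothetical request counts for an inference endpoint, with one burst.
traffic = [100, 102, 98, 101, 99, 100, 103, 950, 101, 99]
flagged = find_anomalies(traffic)
print(flagged)
```

One limitation worth noting: once the burst enters the history window it inflates the standard deviation, so a second spike shortly afterward could go unflagged. Production systems typically use more robust baselines than this.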

Global resilience and visibility

Why it’s critical: Expanding environments and increasing interconnectivity make it difficult to troubleshoot incidents and spot vulnerable APIs. Without unified observability, enterprises risk managing an ecosystem where blind spots compromise both performance and compliance efforts. Global visibility is essential for identifying bottlenecks and fixing vulnerabilities in real time, which in turn keeps operations resilient.

Practical example: A global e-commerce enterprise leverages centralized visibility tools to ensure compliance with European data privacy laws while managing high API activity during North American shopping events like Black Friday. By proactively monitoring and responding to anomalies, the business ensures uptime and maintains customer trust in every region.

Programmability for innovation

Why it’s critical: To avoid drowning in maintenance tasks, enterprises must integrate lifecycle automation that adapts to the shifting demands of AI as well as legacy workloads. Programmability ensures dynamic adaptation to evolving workloads, a critical requirement not only for the parts of an organization deploying AI models, but for all areas of the business that handle fluctuating traffic or compute demands.

Practical example: A manufacturing company deploying predictive AI for supply chain optimization integrates programmable data planes, allowing it to automate deployment changes securely as workloads fluctuate.
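Automating deployment changes as workloads fluctuate often comes down to a small, testable rule that the automation evaluates repeatedly. The sketch below shows one such rule under stated assumptions: the `desired_replicas` function, the per-replica capacity figure, and the replica limits are hypothetical and do not correspond to any specific platform API.

```python
import math


def desired_replicas(requests_per_second, capacity_per_replica,
                     min_replicas=2, max_replicas=20):
    """Return how many replicas are needed for the observed load,
    clamped to a configured minimum and maximum."""
    if capacity_per_replica <= 0:
        raise ValueError("capacity_per_replica must be positive")
    needed = math.ceil(requests_per_second / capacity_per_replica)
    return max(min_replicas, min(max_replicas, needed))


# Hypothetical load samples for a predictive supply chain model.
for load in (50, 450, 5000):
    print(load, "req/s ->", desired_replicas(load, capacity_per_replica=100), "replicas")
```

The clamp is the safety element: a floor keeps the service available during quiet periods, and a ceiling stops a traffic surge, or a bad metric, from scaling the deployment without limit.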

Taming the chaos

While dystopian visions of AI superintelligence dominate headlines, businesses face a far more pressing reality within their application ecosystems—the operational complexity that’s made even more chaotic by the unmanaged, explosive growth of AI. This is the true challenge enterprises must overcome.

Completely extinguishing the Ball of Fire may be a practical impossibility, but taming it is no longer optional—it’s essential for enterprises looking to unlock the promise of AI and create an agile foundation that will future-proof innovation.

Unified platforms like the F5 Application Delivery and Security Platform enable organizations not only to tame the chaos of complex application ecosystems but to reinvent how they use AI to drive scalable, profitable transformation at every layer. AI is the future, but only if deployed securely, efficiently, and responsibly.
