As organizations increasingly adopt AI technologies, they’ve found that keeping large language models (LLMs) up to date with their latest proprietary data can be a challenge. This has driven the adoption of retrieval-augmented generation (RAG), which supplies supplemental data at inference time so the model can return more accurate and useful responses. Delivering that data to the LLM securely is a challenge of its own, and it calls for a secure connectivity solution.

The RAG revolution in enterprise AI

Traditional LLMs, while powerful, are limited by their training data and can't access organization-specific information. RAG enables LLMs to query external knowledge sources during generation, producing outputs that reflect both the model's broad knowledge and an organization's proprietary data.

However, as most organizations have moved to hybrid, multicloud IT environments, their data is often spread across multiple locations. This distribution makes it difficult to implement RAG effectively, because organizations must ensure secure access to every relevant data source while maintaining performance and cost efficiency at scale. Solving this challenge requires a way to securely connect LLMs to data stores wherever they reside, which is why F5, NetApp, and Google Cloud have come together to offer a solution.

A basic RAG workflow: a retriever obtains relevant data from external knowledge sources and combines it with the user's query as context, so the large language model can generate accurate, contextually relevant responses.
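To make the retrieve-then-generate loop concrete, here is a minimal Python sketch under loudly stated assumptions. The two document lists, the bag-of-words cosine scoring, and the names `retrieve` and `build_prompt` are all hypothetical illustrations, not part of any F5, NetApp, or Google Cloud API. The two lists stand in for data held in different environments, echoing the distributed-data problem described above. Production RAG systems typically use an embedding model and a vector index rather than word counts, and they send the augmented prompt to an LLM (for example, one served through Vertex AI) instead of printing it.

```python
import math
import re
from collections import Counter

# Hypothetical toy "knowledge stores" standing in for documents kept in
# different environments (e.g., on-premises and cloud storage).
ON_PREM_DOCS = [
    "Q3 revenue for the EMEA region grew 12 percent year over year.",
    "The VPN maintenance window is every Sunday at 02:00 UTC.",
]
CLOUD_DOCS = [
    "Product X supports single sign-on through SAML 2.0.",
    "EMEA sales targets for Q4 were raised after strong Q3 results.",
]


def tokenize(text: str) -> Counter:
    """Return lowercase bag-of-words term counts for a piece of text."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two term-count vectors (0.0 if either is empty)."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def retrieve(query: str, stores: list[list[str]], k: int = 2) -> list[str]:
    """Retrieval step: score every document in every store, keep the top k with nonzero scores."""
    q = tokenize(query)
    scored = [(cosine(q, tokenize(doc)), doc) for store in stores for doc in store]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for score, doc in scored[:k] if score > 0]


def build_prompt(query: str, context: list[str]) -> str:
    """Augmentation step: prepend the retrieved context to the user's question."""
    context_block = "\n".join(f"- {c}" for c in context)
    return (
        "Answer using only the context below.\n"
        f"Context:\n{context_block}\n"
        f"Question: {query}"
    )


if __name__ == "__main__":
    question = "How did EMEA revenue do in Q3?"
    docs = retrieve(question, [ON_PREM_DOCS, CLOUD_DOCS])
    # Generation step (not shown): this prompt would be sent to the LLM.
    print(build_prompt(question, docs))
```

Keeping retrieval and prompt construction as separate functions mirrors the workflow: the retriever can be pointed at any set of stores, wherever they live, without changing how the context is handed to the model.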

A unified approach to data access

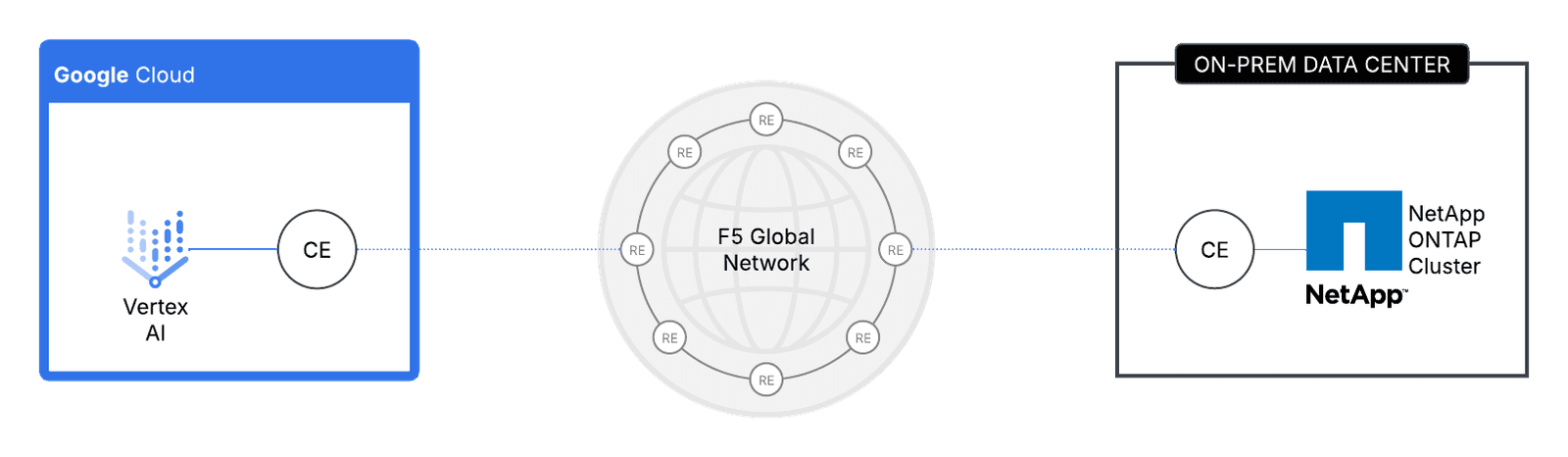

F5 Distributed Cloud Services include secure multicloud networking to connect apps and data across clouds and on-premises environments. Using the private backbone of the F5 Global Network, F5 Distributed Cloud Network Connect works with NetApp to quickly and securely move and store data where and when it’s needed.

Specifically for RAG, Distributed Cloud Network Connect links NetApp storage in the cloud or on premises to LLMs, including Google Cloud's Vertex AI platform, for secure, fast, and relevant inference. This solution creates a seamless framework for accessing distributed data sources across hybrid and multicloud environments.

F5's secure multicloud networking capabilities serve as the foundation, enabling efficient, protected data access across different environments while maintaining centralized observability and orchestration. Organizations can use Distributed Cloud Network Connect with Google Cloud NetApp Volumes, NetApp Cloud Volumes ONTAP for Google Cloud, and other NetApp data storage systems, providing the secure connectivity and fast performance that AI workloads need.

Google Cloud's Vertex AI platform completes the solution by leveraging this unified data access to facilitate RAG when developing context-augmented LLM applications. The platform's efficient resource utilization and secure AI infrastructure help organizations maintain cost-effectiveness while scaling their AI operations.

This joint solution delivers several advantages:

- Enhanced security: Multicloud networking from F5 has security built in, including web application firewalls, bot defense, and API protection, as well as consistent policy enforcement to defend proprietary data and AI models, even across complex hybrid environments.

- Encrypted networking: Layer 3 connections between AI models and NetApp storage (both on premises and cloud-based) are encrypted to protect sensitive data in transit.

- Optimized performance: With points of presence around the world, the F5 Global Network minimizes latency and offers control over transfer speeds for responsive AI applications.

- Simplified multicloud operations: Organizations can manage security and networking from cloud to on premises to edge with the F5 Distributed Cloud Console and let Distributed Cloud Network Connect handle the complexities of multicloud networking.

- Tool rationalization: Reduce the number of security and networking tools required to support RAG and AI.

F5, NetApp, and Google Cloud have come together to help customers securely implement RAG across hybrid and multicloud environments.

A simplified path to AI productivity

Organizations can build on their existing investments in F5, NetApp, and Google Cloud infrastructure to create a secure RAG solution, which makes this approach a practical choice for enterprises looking to enhance their AI capabilities. The partnership enables organizations to use their data for AI-driven insights while maintaining security and control. By simplifying data access for RAG applications, organizations can focus on deriving value from AI rather than managing infrastructure complexity.

F5 Distributed Cloud Services are available from the Google Cloud Marketplace.
